Web Scraping With Python: Static, Dynamic, and Real Examples



In this tutorial, you will learn:

Why web scraping is often done in Python and why this programming language is great for the task.

The difference between scraping static and dynamic sites in Python.

How to set up a Python web scraper project.

What is required to scrape static sites in Python.

How to download the HTML of web pages using various Python HTTP clients.

How to parse HTML with popular Python HTML parsers.

What you need to scrape dynamic sites in Python.

How to implement the data extraction logic for web scraping in Python using different tools.

How to export scraped data to CSV and JSON.

Complete Python web scraping examples using Requests + Beautiful Soup, Playwright, and Selenium.

A step-by-step section on scraping all data from a paginated site.

The unique approach to web scraping that Scrapy offers.

How to handle common web scraping challenges in Python.

Let’s dive in!

What Is Web Scraping in Python?

Web scraping is the process of extracting data from websites, typically using automated tools. In the realm of Python, performing web scraping means writing a Python script that automatically retrieves data from one or more web pages across one or more sites.

Python is one of the most popular programming languages for web scraping. That is because of its widespread adoption and strong ecosystem, which includes a long list of powerful scraping libraries.

If you are interested in exploring nearly all web scraping tools in Python, take a look at our dedicated Python Web Scraping GitHub repository.

Now, the web scraping process in Python can be outlined in these four steps:

Connect to the target page.

Parse its HTML content.

Implement the data extraction logic to locate the HTML elements of interest and extract the desired data from them.

Export the scraped data to a more accessible format, such as CSV or JSON.
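To make the four steps concrete, here is a minimal sketch that maps each one to a small function. It assumes Requests and Beautiful Soup (both covered later in this tutorial) and the .quote, .text, and .author selectors of the Quotes to Scrape page. The function names fetch_html, parse_html, extract_quotes, and export_json are hypothetical helpers for illustration, not part of any library.

```python
import json

import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    # Step 1: connect to the target page and download its HTML
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def parse_html(html):
    # Step 2: parse the raw HTML string into a navigable tree
    return BeautifulSoup(html, "html.parser")


def extract_quotes(soup):
    # Step 3: locate each .quote element and pull out its text and author
    return [
        {
            "text": quote.select_one(".text").get_text(strip=True),
            "author": quote.select_one(".author").get_text(strip=True),
        }
        for quote in soup.select(".quote")
    ]


def export_json(records, path):
    # Step 4: save the records in a more accessible format (JSON here)
    with open(path, mode="w", encoding="utf-8") as file:
        json.dump(records, file, indent=4, ensure_ascii=False)
```

Chained together, export_json(extract_quotes(parse_html(fetch_html("http://quotes.toscrape.com/"))), "quotes.json") would run the whole pipeline against the live page.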

The specific technologies you need to use for the above steps, as well as the techniques to apply, depend on whether the web page is static or dynamic. So, let’s explore that next.

Python Web Scraping: Static vs Dynamic Sites

In web scraping, the biggest factor that determines how you should build your scraping bot is whether the target site is static or dynamic.

For static sites, the HTML documents returned by the server already contain all (or most) of the data you want. These pages might still use JavaScript for some minor client-side interactions. Still, the content you receive from the server is essentially the complete page you see in your browser.

An example of a static site. What you see in the browser is what you get from the server

In contrast, dynamic sites rely heavily on JavaScript to load and/or render data in the browser. The initial HTML document returned by the server often contains very little actual data. Instead, data is fetched and rendered by JavaScript either on the first page load or after user interactions (such as infinite scrolling or dynamic pagination).

An example of a dynamic site. Note the dynamic data loading

For more details, see our guide on static vs dynamic content for web scraping.

As you can imagine, these two scenarios are very different and require separate Python scraping stacks. In the next chapters of this tutorial, you will learn how to scrape both static and dynamic sites in Python. By the end, you will also find complete, real-world examples.

Web Scraping Python Project Setup

No matter whether your target site has static or dynamic pages, you need a Python project set up to scrape it. Here is how to prepare your environment for web scraping with Python.

Prerequisites

To build a Python web scraper, you need the following prerequisites:

Python 3+ installed locally

pip installed locally

Note: pip comes bundled with Python starting from version 3.4 (released in 2014), so you usually do not have to install it separately.

Keep in mind that most systems already come with Python preinstalled. You can verify your installation by running:

python3 --version

Or on some systems:

python --version

You should see output similar to:

Python 3.13.1

If you get a “command not found” error, Python is most likely not installed (or not on your PATH). In that case, download it from the official Python website and follow the installation instructions for your operating system.

While not strictly required, a Python code editor or IDE makes development easier. We recommend:

Visual Studio Code with the Python extension

PyCharm (the free Community Edition should be fine)

These tools will equip you with syntax highlighting, linting, debugging, and other features that make writing Python scrapers much smoother.

Note: To follow along with this tutorial, you should also have a basic understanding of how the web works and how CSS selectors function.

Project Setup

Open the terminal and start by creating a folder for your Python scraping project, then move into it:

mkdir python-web-scraper
cd python-web-scraper

Next, create a Python virtual environment inside this folder:

python -m venv venv

Activate the virtual environment. On Linux or macOS, run:

source venv/bin/activate

Equivalently, on Windows, execute:

venv\Scripts\activate

With your virtual environment activated, you can now install all required packages for web scraping locally.

Now, open this project folder in your favorite Python IDE. Then, create a new file named scraper.py. This is where you will write your Python code for fetching and parsing web data.

Your Python web scraping project directory should now look like this:

python-web-scraper/
├── venv/          # Your virtual environment
└── scraper.py     # Your Python scraping script

Amazing! You are all set to start coding your Python scraper.

Scraping Static Sites in Python

When dealing with static web pages, the HTML documents returned by the server already contain all the data you want to scrape. So, in these scenarios, your two specific steps to keep in mind are:

Use an HTTP client to retrieve the HTML document of the page, replicating the request the browser makes to the web server.

Use an HTML parser to process the content of that HTML document and prepare to extract the data from it.

Then, you will need to extract the specific data and export it to a user-friendly format. At a high level, these operations are the same for both static and dynamic sites. So, we will focus on them later.

Thus, with static sites, you typically combine:

A Python HTTP client to download the web page.

A Python HTML parser to parse the HTML structure, navigate it, and extract data from it.

As a sample static site, from now on, we will refer to the Quotes to Scrape page:

The target static site

This is a simple static web page designed for practicing web scraping. You can confirm it is static by right-clicking on the page in your browser and selecting the “View page source” option. This is what you should get:

The HTML of the target static page

What you see is the original HTML document returned by the server, before it is rendered in the browser. Note that it already contains all the quotes data shown on the page.
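Viewing the page source is a manual check. As a rough programmatic equivalent, the sketch below parses the raw server HTML with Beautiful Soup and reports whether the elements you care about are already there. The helper name has_target_data is hypothetical, and this is only a heuristic: a page can include some target elements in its initial HTML and still load more of them with JavaScript.

```python
from bs4 import BeautifulSoup


def has_target_data(raw_html, selector):
    # Parse the HTML exactly as the server sent it (no JavaScript is executed)
    soup = BeautifulSoup(raw_html, "html.parser")
    # If at least one target element is already present, the data is served statically
    return soup.select_one(selector) is not None
```

For example, has_target_data(requests.get("http://quotes.toscrape.com/").text, ".quote") should return True for this static page, while a page that builds its content in the browser may return False.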

In the next three chapters, you will learn:

How to use a Python HTTP client to download the HTML document of this static page.

How to parse it with an HTML parser.

How to perform both steps together in a dedicated scraping framework.

This way, you will be ready to build a complete Python scraper for static sites.

Downloading the HTML Document of the Target Page

Python offers several HTTP clients, but the three most popular ones are:

requests: A simple, elegant HTTP library for Python that makes sending HTTP requests incredibly straightforward. It is synchronous, widely adopted, and great for most small to medium scraping tasks.

httpx: A next-generation HTTP client that builds on Requests ideas but offers both synchronous and asynchronous APIs, plus optional HTTP/2 support.

aiohttp: An asynchronous HTTP client (and server) framework built for asyncio. It is ideal for high-concurrency scraping scenarios where you want to run multiple requests concurrently.

Discover how they compare in our comparison of Requests vs HTTPX vs AIOHTTP.

Next, you will see how to install these libraries and use them to perform the HTTP GET request to retrieve the HTML document of the target page.

Requests

Install Requests with:

pip install requests

Use it to retrieve the HTML of the target web page with:

import requests

url = "http://quotes.toscrape.com/"
response = requests.get(url)
html = response.text

# HTML parsing logic...

The get() method makes the HTTP GET request to the specified URL. The web server will respond with the HTML document of the page, which response.text exposes as a string.
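In practice, you will often want a bit more control than a bare requests.get() call. The sketch below shows one common pattern, not the only one: it reuses a requests.Session, sends a custom User-Agent header, sets a timeout, and calls raise_for_status() so HTTP errors are not silently parsed as HTML. The User-Agent string and the helper names make_session and fetch_html are hypothetical placeholders.

```python
import requests

# Hypothetical User-Agent string: replace it with one that identifies your scraper
HEADERS = {"User-Agent": "python-web-scraper/0.1"}


def make_session():
    # A Session reuses connections and sends these headers with every request
    session = requests.Session()
    session.headers.update(HEADERS)
    return session


def fetch_html(session, url, timeout=10):
    # Without a timeout, a stalled server could make the call hang indefinitely
    response = session.get(url, timeout=timeout)
    # Raise requests.HTTPError on 4xx/5xx instead of parsing an error page
    response.raise_for_status()
    return response.text
```

With this in place, html = fetch_html(make_session(), "http://quotes.toscrape.com/") replaces the plain requests.get() call.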

Further reading:

Master Python HTTP Requests: Advanced Guide

Python Requests User Agent Guide: Setting and Changing

Guide to Using a Proxy with Python Requests

HTTPX

Install HTTPX with:

pip install httpx

Use it to get the HTML of the target page like this:

import httpx

url = "http://quotes.toscrape.com/"
response = httpx.get(url)
html = response.text

# HTML parsing logic...

As you can see, for this simple scenario, the API is essentially the same as in Requests. The main advantage of HTTPX over Requests is that it also offers async support.

Further reading:

Web Scraping With HTTPX and Python

How to Use HTTPX with Proxies: A Complete Guide

AIOHTTP

Install AIOHTTP with:

pip install aiohttp

Use it to asynchronously connect to the destination URL with:

import asyncio
import aiohttp

async def main():
    async with aiohttp.ClientSession() as session:
        url = "http://quotes.toscrape.com/"
        async with session.get(url) as response:
            html = await response.text()
            # HTML parsing logic...

asyncio.run(main())

The above snippet creates an asynchronous HTTP session with AIOHTTP. Then, it uses that session to send a GET request to the given URL, awaiting the response from the server. Note the use of async with blocks to guarantee that the async resources are properly opened and closed.

AIOHTTP is asynchronous only, so you need asyncio from the Python standard library to run its coroutines.
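Since AIOHTTP shines when many requests run at once, here is a minimal sketch of that pattern: one shared ClientSession, asyncio.gather() to run the downloads concurrently, and an asyncio.Semaphore to cap how many are in flight. The helper names and the default limit of 5 are hypothetical choices for illustration, not AIOHTTP defaults.

```python
import asyncio

import aiohttp


async def fetch_html(session, url, semaphore):
    # The semaphore caps how many requests are in flight at the same time
    async with semaphore:
        async with session.get(url) as response:
            # Raise aiohttp.ClientResponseError on 4xx/5xx responses
            response.raise_for_status()
            return await response.text()


async def fetch_all(session, urls, max_concurrency=5):
    semaphore = asyncio.Semaphore(max_concurrency)
    # gather() runs the downloads concurrently and returns results in input order
    return await asyncio.gather(*(fetch_html(session, url, semaphore) for url in urls))


async def main(urls):
    # One shared ClientSession reuses connections across all requests
    async with aiohttp.ClientSession() as session:
        return await fetch_all(session, urls)
```

For example, asyncio.run(main(["http://quotes.toscrape.com/page/1/", "http://quotes.toscrape.com/page/2/"])) returns a list of HTML strings in input order. Passing the session into fetch_all, rather than creating it there, keeps connection reuse in one place and makes the function easy to test.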

Further reading:

Asynchronous Web Scraping With AIOHTTP in Python

How to Set Proxy in AIOHTTP

Parsing HTML With Python

Right now, the html variable only contains the raw text of the HTML document returned by the server. You can verify that by printing it:

print(html)

You will get an output like this in your terminal:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Quotes to Scrape</title>
    <link rel="stylesheet" href="/static/bootstrap.min.css">
    <link rel="stylesheet" href="/static/main.css">
</head>
<body>
    <!-- omitted for brevity... -->

If you want to programmatically select HTML nodes and extract data from them, you must parse this HTML string into a navigable DOM structure.

The most popular HTML parsing library in Python is Beautiful Soup. This package sits on top of an HTML or XML parser and makes it easy to scrape information from web pages. It exposes Pythonic methods for iterating, searching, and modifying the parse tree.

Another, less common but still powerful option is PyQuery. This offers a jQuery-like syntax for parsing and querying HTML.

In the next two chapters, you will explore how to transform the HTML string into a parsed tree structure. The actual logic for extracting specific data from this tree will be presented later.

Beautiful Soup

First, install Beautiful Soup:

pip install beautifulsoup4

Then, use it to parse the HTML like this:

from bs4 import BeautifulSoup

# HTML retrieval logic...

soup = BeautifulSoup(html, "html.parser")

# Data extraction logic...

In the above snippet, "html.parser" is the name of the underlying parser that Beautiful Soup uses to parse the html string. Specifically, html.parser is the HTML parser built into the Python standard library, so it requires no extra installation.

For better performance, it is best to use lxml instead. You can install both Beautiful Soup and lxml with:

pip install beautifulsoup4 lxml

Then, update the parsing logic like so:

soup = BeautifulSoup(html, "lxml")

At this point, soup is a parsed DOM-like tree structure that you can navigate using Beautiful Soup’s API to find tags, extract text, read attributes, and more.
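As a quick, self-contained illustration of that API, the sketch below parses a small, hypothetical HTML fragment loosely modeled on one quote from the target page, then finds a tag, extracts its text, and reads an attribute. It uses the built-in html.parser so it runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment loosely modeled on one quote of the target page
html = """
<div class="quote">
  <span class="text">A sample quote.</span>
  <small class="author">Jane Doe</small>
  <a href="/author/Jane-Doe">(about)</a>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Find a tag by name and CSS class (class_ avoids clashing with the keyword "class")
author_tag = soup.find("small", class_="author")
author = author_tag.get_text(strip=True)

# Find a tag with a CSS selector, then read its attributes
link = soup.select_one(".quote a")
href = link["href"]        # Subscript access raises KeyError if the attribute is missing
title = link.get("title")  # .get() returns None if the attribute is missing

print(author, href, title)
```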

Further reading:

BeautifulSoup Web Scraping Guide

PyQuery

Install PyQuery with pip:

pip install pyquery

Use it to parse HTML as follows:

from pyquery import PyQuery as pq

# HTML retrieval logic...

d = pq(html)

# Data extraction logic...

d is a PyQuery object containing the parsed DOM-like tree. You can apply CSS selectors to navigate it and chain methods using a jQuery-like syntax.

Scraping Dynamic Sites in Python

When dealing with dynamic web pages, the HTML document returned by the server is often just a minimal skeleton. That includes a lot of JavaScript, which the browser executes to fetch data and dynamically build or update the page content.

Since only browsers can fully render dynamic pages, you will need to rely on them for scraping dynamic sites in Python. In particular, you must use browser automation tools. These expose an API that lets you programmatically control a web browser.

In this case, web scraping usually boils down to:

Instructing the browser to visit the page of interest.

Waiting for the dynamic content to load and/or optionally simulating user interactions.

Extracting the data from the fully rendered page.

Now, browser automation tools tend to control browsers in headless mode, meaning the browser operates without the GUI. This saves a lot of resources, which is important considering how resource-intensive most browsers are.

This time, your target will be the dynamic version of the Quotes to Scrape page:

The target dynamic page

This version retrieves the quotes data via AJAX and renders it dynamically on the page using JavaScript. You can verify that by inspecting the network requests in the DevTools:

The AJAX request made by the page to retrieve the quotes data

Now, the two most widely used browser automation tools in Python are:

Playwright: A modern browser automation library developed by Microsoft. It supports Chromium, Firefox, and WebKit. It is fast, has powerful selectors, and offers great built-in support for waiting on dynamic content.

Selenium: A well-established, widely adopted framework for automating browsers in Python for scraping and testing use cases.

Dig into the two solutions in our comparison of Playwright vs Selenium.

In the next section, you will see how to install and configure these tools. You will use them to instruct a controlled, Chromium-based browser to navigate to the target page.

Note: This time, there is no need for a separate HTML parsing step. That is because browser automation tools provide direct APIs for selecting nodes and extracting data from the DOM.

Playwright

To install Playwright, run:

pip install playwright

Then, you need to download the browser binaries that Playwright controls (Chromium, Firefox, and WebKit) with:

python -m playwright install

Use Playwright to instruct a headless Chromium instance to connect to the target dynamic page as below:

from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    # Open a controllable Chromium instance in headless mode
    browser = p.chromium.launch(headless=True)
    page = browser.new_page()

    # Visit the target page
    url = "http://quotes.toscrape.com/scroll"
    page.goto(url)

    # Data extraction logic...

    # Data export logic...

    # Close the browser and release its resources
    browser.close()

This opens a headless Chromium browser and navigates to the page using the goto() method.

Further reading:

A Guide to Playwright Web Scraping

Avoid Bot Detection With Playwright Stealth

Selenium

Install Selenium with:

pip install selenium

Note: In the past, you also needed to manually install a browser driver (e.g., ChromeDriver to control Chromium browsers). However, with the latest versions of Selenium (4.6 and above), this is no longer required. Selenium now automatically manages the appropriate driver for your installed browser. All you need is to have Google Chrome installed locally.

Harness Selenium to connect to the dynamic web page like this:

from selenium import webdriver
from selenium.webdriver.chrome.options import Options

# Launch a Chrome instance in headless mode
options = Options()
options.add_argument("--headless")
driver = webdriver.Chrome(options=options)

# Visit the target page
url = "http://quotes.toscrape.com/scroll"
driver.get(url)

# Data extraction logic...

# Data export logic...

# Close the browser and clean up resources
driver.quit()

Compared to Playwright, with Selenium, you must explicitly set headless mode using Chrome CLI flags. Also, the method to tell the browser to navigate to a URL is get() instead of goto().

Further reading:

Guide to Web Scraping With Selenium

Selenium User Agent Guide: Setting and Changing

Web Scraping With Selenium Wire in Python

Implement the Python Web Data Parsing Logic

In the previous steps, you learned how to parse/render the HTML of static/dynamic pages, respectively. Now it is time to see how to actually scrape data from that HTML.

The first step is to get familiar with the HTML of your target page. Particularly, focus on the elements that contain the data you want. In this case, assume you want to scrape all the quotes (text and author) from the target page.

So, open the page in your browser, right-click on a quote element, and select the “Inspect” option:

The quote HTML elements

Notice how each quote is wrapped in an HTML element with the .quote CSS class.

Next, expand the HTML of a single quote:

The HTML of a single quote element

You will see that:

The quote text is inside a .text HTML element.

The author name is inside an .author HTML node.

Since the page contains multiple quotes, you will also need a data structure to store them. A simple list will work well:

quotes = []

In short, your overall data extraction plan is:

Select all .quote elements on the page.

Iterate over them, and for each quote:

Extract the quote text from the .text node.

Extract the author name from the .author node.

Create a new dictionary with the scraped quote and author.

Append it to the quotes list.

Now, let’s see how to implement the above Python web data extraction logic using Beautiful Soup, PyQuery, Playwright, and Selenium.

Beautiful Soup

Implement the data extraction logic in Beautiful Soup with:

# HTML retrieval logic...

# Where to store the scraped data
quotes = []

# Select all quote HTML elements on the page
quote_elements = soup.select(".quote")

for quote_element in quote_elements:
    # Extract the quote text
    text_element = quote_element.select_one(".text")
    text = text_element.get_text(strip=True)

    # Extract the author name
    author_element = quote_element.select_one(".author")
    author = author_element.get_text(strip=True)

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Data export logic...

The above snippet calls the select() method to find all HTML elements matching the given CSS selector. Then, for each of those elements, it selects the specific data node with select_one(). This works just like select() but returns only the first matching node (or None if nothing matches).

Next, it extracts the text content of the current node with get_text(). With the scraped data, it builds a dictionary and appends it to the quotes list.

Note that the same results could also have been achieved with find_all() and find() (as you will see later on in the step-by-step section).
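To see that equivalence on a tiny example, the sketch below extracts the same data twice from a hypothetical two-quote fragment: once with CSS selectors (select() and select_one()) and once with find_all() and find() using the class_ keyword argument.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment with two quotes
html = """
<div class="quote"><span class="text">First</span><small class="author">Jane Doe</small></div>
<div class="quote"><span class="text">Second</span><small class="author">John Roe</small></div>
"""

soup = BeautifulSoup(html, "html.parser")

# CSS selector approach: select() for many nodes, select_one() for one
via_select = [
    {
        "text": quote.select_one(".text").get_text(strip=True),
        "author": quote.select_one(".author").get_text(strip=True),
    }
    for quote in soup.select(".quote")
]

# Tag/attribute approach: find_all() for many nodes, find() for one
via_find = [
    {
        "text": quote.find("span", class_="text").get_text(strip=True),
        "author": quote.find("small", class_="author").get_text(strip=True),
    }
    for quote in soup.find_all("div", class_="quote")
]

print(via_select == via_find)
```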

PyQuery

Write the data extraction code using PyQuery as follows:

# HTML retrieval logic...

# Where to store the scraped data
quotes = []

# Select all quote HTML elements on the page
quote_elements = d(".quote")

# items() yields each matched element already wrapped as a PyQuery object
for q in quote_elements.items():
    # Extract the quote text
    text = q(".text").text().strip()

    # Extract the author name
    author = q(".author").text().strip()

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Data export logic...

Notice how similar the syntax is to jQuery.

Playwright

Build the Python data extraction logic in Playwright with:

# Page visiting logic...

# Where to store the scraped data
quotes = []

# Select all quote HTML elements on the page
quote_elements = page.locator(".quote")

# Wait for the quote elements to appear on the page
quote_elements.first.wait_for()

# Iterate over each quote element
for quote_element in quote_elements.all():
    # Extract the quote text
    text_element = quote_element.locator(".text")
    text = text_element.text_content().strip()

    # Extract quote author
    author_element = quote_element.locator(".author")
    author = author_element.text_content().strip()

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Data export logic...

In this case, remember that you are working with a dynamic page. That means the quote elements might not be rendered immediately when you apply the CSS selector (because they are loaded dynamically via JavaScript).

Playwright implements an auto-wait mechanism for most locator actions, but this does not apply to the all() method, which immediately returns whatever matches at that moment. Therefore, you need to manually wait for the quote elements to appear on the page using wait_for() before calling all(). By default, wait_for() waits for up to 30 seconds.

Note: wait_for() must be called on a locator that resolves to a single element to avoid violating Playwright's strict mode. That is why you must first access a single locator with .first.

Selenium

This is how to extract the data using Selenium:

# Page visiting logic...

# Where to store the scraped data
quotes = []

# Wait up to 30 seconds for the quote elements to appear on the page
wait = WebDriverWait(driver, 30)
quote_elements = wait.until(
    EC.presence_of_all_elements_located((By.CSS_SELECTOR, ".quote"))
)

# Iterate over each quote element
for quote_element in quote_elements:
    # Extract the quote text
    text_element = quote_element.find_element(By.CSS_SELECTOR, ".text")
    text = text_element.text.strip()

    # Extract the author name
    author_element = quote_element.find_element(By.CSS_SELECTOR, ".author")
    author = author_element.text.strip()

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Data export logic...

This time, you can wait for the quote elements to appear on the page using Selenium’s expected conditions mechanism. This employs WebDriverWait together with presence_of_all_elements_located(), which waits until at least one element matching the .quote selector is present in the DOM and then returns all matching elements.

Note that the above code requires three extra imports:

from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

Export the Scraped Data

Currently, you have the scraped data stored in a quotes list. To complete a typical Python web scraping workflow, the final step is to export this data to a more accessible format like CSV or JSON.

See how to do both in Python!

Export to CSV

Export the scraped data to CSV with:

import csv

# Python scraping logic...

with open("quotes.csv", mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["text", "author"])
    writer.writeheader()
    writer.writerows(quotes)

This uses Python’s built-in csv library to write your quotes list into an output file called quotes.csv. The file will include column headers named text and author.

Export to JSON

Export the scraped quotes data to a JSON file with:

import json

# Python scraping logic...

with open("quotes.json", mode="w", encoding="utf-8") as file:
    json.dump(quotes, file, indent=4, ensure_ascii=False)

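This produces a quotes.json file containing the quotes list in JSON format. Setting ensure_ascii=False keeps non-ASCII characters (such as the curly quotes around each quote) readable instead of escaping them as \uXXXX sequences.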
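A quick way to sanity-check both exports is to write them and read them back. The sketch below does that in a temporary directory using only the standard library; the helper names export_quotes and read_back and the sample rows are hypothetical. The comma and the curly quotes in the sample exercise CSV quoting and UTF-8 handling.

```python
import csv
import json
import tempfile
from pathlib import Path


def export_quotes(quotes, out_dir):
    # Write the same records to quotes.csv and quotes.json inside out_dir
    out_dir = Path(out_dir)
    csv_path = out_dir / "quotes.csv"
    json_path = out_dir / "quotes.json"
    with open(csv_path, mode="w", newline="", encoding="utf-8") as file:
        writer = csv.DictWriter(file, fieldnames=["text", "author"])
        writer.writeheader()
        writer.writerows(quotes)
    with open(json_path, mode="w", encoding="utf-8") as file:
        json.dump(quotes, file, indent=4, ensure_ascii=False)
    return csv_path, json_path


def read_back(csv_path, json_path):
    # DictReader uses the header row as keys, so each row becomes a dict again
    with open(csv_path, newline="", encoding="utf-8") as file:
        csv_rows = list(csv.DictReader(file))
    with open(json_path, encoding="utf-8") as file:
        json_rows = json.load(file)
    return csv_rows, json_rows


# Hypothetical sample rows
sample_quotes = [
    {"text": "“A sample quote.”", "author": "Jane Doe"},
    {"text": "Another one, with a comma", "author": "John Roe"},
]

with tempfile.TemporaryDirectory() as tmp_dir:
    csv_rows, json_rows = read_back(*export_quotes(sample_quotes, tmp_dir))
```

Because CSV stores everything as text, this round trip is only exact for string fields like the ones scraped here; numeric fields would come back from the CSV as strings.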

Complete Python Web Scraping Examples

You now have all the building blocks needed for web scraping in Python. The last step is simply to put it all together inside the scraper.py file within your Python project.

Note: If you prefer a more guided approach, skip to the next chapter.

Below, you will find complete examples using the most common scraping stacks in Python. To run any of them, install the required libraries, copy the code into scraper.py, and launch it with:

python3 scraper.py

Or, equivalently, on Windows and other systems:

python scraper.py

After running the script, you will see either a quotes.csv file or a quotes.json file appear in your project folder, depending on the example you ran.

quotes.csv will look like this:

The quotes.csv output file

quotes.json, on the other hand, will contain:

The quotes.json output file

Time to check out the complete web scraping Python examples!

Requests + Beautiful Soup

# pip install requests beautifulsoup4 lxml

import requests
from bs4 import BeautifulSoup
import csv

# Retrieve the HTML of the target page
url = "http://quotes.toscrape.com/"
response = requests.get(url)
html = response.text

# Parse the HTML
soup = BeautifulSoup(html, "lxml")

# Where to store the scraped data
quotes = []

# Select all quote HTML elements on the page
quote_elements = soup.select(".quote")

for quote_element in quote_elements:
    # Extract the quote text
    text_element = quote_element.select_one(".text")
    text = text_element.get_text(strip=True)

    # Extract the author name
    author_element = quote_element.select_one(".author")
    author = author_element.get_text(strip=True)

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Export the scraped data to CSV
with open("quotes.csv", mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["text", "author"])
    writer.writeheader()
    writer.writerows(quotes)

Playwright

# pip install playwright
# python -m playwright install

from playwright.sync_api import sync_playwright
import json

with sync_playwright() as p:
    # Open a controllable Chromium instance in headless mode
    browser = p.chromium.launch(headless=True)
    page = browser.new_page()

    # Visit the target page
    url = "http://quotes.toscrape.com/scroll"
    page.goto(url)

    # Where to store the scraped data
    quotes = []

    # Select all quote HTML elements on the page
    quote_elements = page.locator(".quote")

    # Wait for the quote elements to appear on the page
    quote_elements.first.wait_for()

    # Iterate over each quote element
    for quote_element in quote_elements.all():
        # Extract the quote text
        text_element = quote_element.locator(".text")
        text = text_element.text_content().strip()

        # Extract quote author
        author_element = quote_element.locator(".author")
        author = author_element.text_content().strip()

        # Populate a new quote dictionary with the scraped data
        quote = {
            "text": text,
            "author": author
        }

        # Append to the list
        quotes.append(quote)

    # Export the scraped data to JSON
    with open("quotes.json", mode="w", encoding="utf-8") as file:
        json.dump(quotes, file, indent=4, ensure_ascii=False)

    # Close the browser and release its resources
    browser.close()

Selenium

# pip install selenium

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import csv

# Launch a Chrome instance in headless mode
options = Options()
options.add_argument("--headless")
driver = webdriver.Chrome(options=options)

# Visit the target page
url = "http://quotes.toscrape.com/scroll"
driver.get(url)

# Where to store the scraped data
quotes = []

# Wait up to 30 seconds for the quote elements to appear on the page
wait = WebDriverWait(driver, 30)
quote_elements = wait.until(
    EC.presence_of_all_elements_located((By.CSS_SELECTOR, ".quote"))
)

# Iterate over each quote element
for quote_element in quote_elements:
    # Extract the quote text
    text_element = quote_element.find_element(By.CSS_SELECTOR, ".text")
    text = text_element.text.strip()

    # Extract the author name
    author_element = quote_element.find_element(By.CSS_SELECTOR, ".author")
    author = author_element.text.strip()

    # Populate a new quote dictionary with the scraped data
    quote = {
        "text": text,
        "author": author
    }

    # Append to the list
    quotes.append(quote)

# Export the scraped data to CSV
with open("quotes.csv", mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["text", "author"])
    writer.writeheader()
    writer.writerows(quotes)

# Close the browser and clean up resources
driver.quit()

Build a Web Scraper in Python: Step-By-Step Guide

For a more complete, guided approach, follow this section to build a web scraper in Python using Requests and Beautiful Soup.

The goal is to show you how to extract all quote data from the target site by following its pagination through every page. For each quote, you will scrape the text, author, and list of tags. Finally, you will see how to export the scraped data to a CSV file.

Step #1: Connect to the Target URL

We will assume you already have a Python project set up. In an activated virtual environment, install the required libraries using:

```bash
pip install requests beautifulsoup4 lxml
```

Also, your `scraper.py` file should already include the necessary imports:

```python
import requests
from bs4 import BeautifulSoup
```

The first thing to do in a web scraper is to connect to your target website. Use `requests` to download a web page with the following line of code:

```python
page = requests.get("https://quotes.toscrape.com")
```

The `page.text` attribute contains the HTML document returned by the server as a string. Time to feed the `text` property to Beautiful Soup to parse the HTML content of the web page!

Step #2: Parse the HTML content

Pass `page.text` to the `BeautifulSoup()` constructor to parse the HTML document:

```python
soup = BeautifulSoup(page.text, "lxml")
```

You can now use `soup` to select the desired HTML elements from the page. See how!

Step #3: Define the Node Selection Logic

To extract data from a web page, you must first identify the HTML elements of interest. In particular, you must define a selection strategy for the elements that contain the data you want to scrape.

You can achieve that by using the development tools offered by your browser. In Chrome, right-click on the HTML element of interest and select the “Inspect” option. In this case, do that on a quote element:

As you can see here, each quote `<div>` HTML node can be identified with the `.quote` CSS selector. Each quote node contains:

- The quote text in a `<span>` you can select with `.text`.
- The author of the quote in a `<small>` you can select with `.author`.
- A list of tags in a `<div>` element, each contained in an `<a>` element. You can select them all with `.tags .tag`.
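Before writing the full scraper, you can check these selectors against a small HTML fragment without sending any request. The sketch below assumes simplified markup modeled on the structure described above (it is not copied from the live page) and uses Beautiful Soup's built-in `"html.parser"` backend, so it needs only `beautifulsoup4`. Beautiful Soup's `select()` and `select_one()` methods accept the same CSS selectors listed above.

```python
from bs4 import BeautifulSoup

# Simplified, hand-written markup (an assumption) mimicking one quote node
SAMPLE_HTML = """
<div class="quote">
  <span class="text">A sample quote.</span>
  <span>by <small class="author">Sample Author</small></span>
  <div class="tags">
    Tags:
    <a class="tag" href="/tag/life/">life</a>
    <a class="tag" href="/tag/humor/">humor</a>
  </div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# .quote matches every quote container; take the first (and only) one
quote_element = soup.select(".quote")[0]

# .text and .author match the text <span> and the author <small>
text = quote_element.select_one(".text").get_text(strip=True)
author = quote_element.select_one(".author").get_text(strip=True)

# .tags .tag matches every tag <a> nested inside the tags <div>
tags = [a.get_text(strip=True) for a in quote_element.select(".tags .tag")]

print(text, author, tags)
```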

Wonderful! Get ready to implement the Python scraping logic.

Step #4: Extract Data from the Quote Elements

First, you need a data structure to keep track of the scraped data. For this reason, initialize a list variable:

```python
quotes = []
```

Then, use `soup` to extract the quote elements from the DOM, targeting the `quote` class behind the `.quote` CSS selector defined earlier.

Here, we will use Beautiful Soup's `find()` and `find_all()` methods to introduce a different approach from what we have explored so far:

- `find()`: Returns the first HTML element that matches the given tag name and attribute filters, or `None` if there is no match.
- `find_all()`: Returns a list of all HTML elements matching the filters passed as parameters.

Select all quote elements with:

```python
quote_elements = soup.find_all("div", class_="quote")
```

The `find_all()` method returns the list of all `<div>` HTML elements with the `quote` class. Iterate over the `quote_elements` list and collect the quote data as below:

```python
for quote_element in quote_elements:
    # Extract the text of the quote
    text = quote_element.find("span", class_="text").get_text(strip=True)

    # Extract the author of the quote
    author = quote_element.find("small", class_="author").get_text(strip=True)

    # Extract the tag <a> HTML elements related to the quote
    tag_elements = quote_element.select(".tags .tag")

    # Store the list of tag strings in a list
    tags = []
    for tag_element in tag_elements:
        tags.append(tag_element.get_text(strip=True))
```

The Beautiful Soup `find()` method retrieves the single HTML element of interest. Since a quote can have more than one tag, the snippet uses `select()` with the `.tags .tag` CSS selector to get all tag elements and stores their strings in a list.
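Keep in mind that `find()` returns `None` when no element matches, so chaining `.get_text()` directly raises an `AttributeError` if a quote ever lacks, say, an author. A minimal defensive variant, assuming you prefer an empty string over a crash, could look like the sketch below. The `safe_text` helper and the sample markup are hypothetical and exist only for illustration.

```python
from bs4 import BeautifulSoup


def safe_text(parent, tag, class_name):
    """Return the stripped text of the first matching child, or "" if none exists."""
    element = parent.find(tag, class_=class_name)
    # find() returns None when nothing matches, so guard before calling get_text()
    return element.get_text(strip=True) if element is not None else ""


# Hypothetical quote node that is missing its <small class="author"> element
html = '<div class="quote"><span class="text">No author here.</span></div>'
quote_element = BeautifulSoup(html, "html.parser").find("div", class_="quote")

print(safe_text(quote_element, "span", "text"))            # No author here.
print(repr(safe_text(quote_element, "small", "author")))   # ''
```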

Then, you can transform the scraped data into a dictionary and append it to the `quotes` list as follows:

```python
# Inside the for loop, after extracting text, author, and tags
quotes.append(
    {
        "text": text,
        "author": author,
        "tags": ", ".join(tags)  # Merge the tags into an "A, B, ..., Z" string
    }
)
```

Great! You just saw how to extract all quote data from a single page.

Yet, keep in mind that the target website consists of several web pages. Learn how to crawl the entire website!

Step #5: Implement the Crawling Logic

At the bottom of the home page, you can find a “Next →” `<a>` HTML element that redirects to the next page:

The “Next →” element

This HTML element is present on every page except the last one. This scenario is common on paginated websites: by following the link contained in the “Next →” element, you can navigate the entire website.

So, start from the home page and go through each page of the target website. All you have to do is look for the `<li>` HTML element with the `next` class and extract the relative link to the next page from its `<a>` child.

Implement the crawling logic as follows:

```python
# The URL of the home page of the target website
base_url = "https://quotes.toscrape.com"

# Retrieve the page and initialize soup...

# Get the "Next →" HTML element
next_li_element = soup.find("li", class_="next")

# Keep going as long as there is a next page to scrape
while next_li_element is not None:
    next_page_relative_url = next_li_element.find("a", href=True)["href"]

    # Get the new page
    page = requests.get(base_url + next_page_relative_url)

    # Parse the new page
    soup = BeautifulSoup(page.text, "lxml")

    # Scraping logic...

    # Look for the "Next →" HTML element in the new page
    next_li_element = soup.find("li", class_="next")
```

The `while` loop iterates over each page until there is no next page. It extracts the relative URL of the next page and uses it to build the absolute URL of the next page to scrape. Then, it downloads and parses that page, scrapes it, and repeats the logic.
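String concatenation such as `base_url + next_page_relative_url` works here because the site's `href` values start with `/`. The standard library's `urllib.parse.urljoin()` additionally handles absolute links and paths relative to the current page. The sketch below isolates the loop logic under that assumption. `crawl()` and its `fetch_page` callback are hypothetical names: the callback stands in for the download, parse, and scrape step, and `max_pages` is an extra safety cap that the original script does not have.

```python
from urllib.parse import urljoin


def crawl(start_url, fetch_page, max_pages=50):
    """Follow "Next" links from start_url and collect the items of every page.

    fetch_page(url) is a hypothetical callback that must return a tuple
    (items, next_href), where next_href is None on the last page.
    """
    items = []
    url = start_url
    for _ in range(max_pages):  # hard cap against endless pagination loops
        page_items, next_href = fetch_page(url)
        items.extend(page_items)
        if next_href is None:  # no "Next →" element: last page reached
            break
        # Resolve the (possibly relative) href against the current page URL
        url = urljoin(url, next_href)
    return items


# Fake two-page site so the loop can be exercised without network access
fake_site = {
    "https://quotes.toscrape.com/": (["q1", "q2"], "/page/2/"),
    "https://quotes.toscrape.com/page/2/": (["q3"], None),
}
print(crawl("https://quotes.toscrape.com/", fake_site.__getitem__))  # ['q1', 'q2', 'q3']
```

In a real scraper, `fetch_page` would wrap the `requests.get()`, `BeautifulSoup()`, and scraping logic from this guide, returning the scraped quotes together with the `href` of the `<a>` inside `li.next` (or `None` when that element is missing).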

Fantastic! You now know how to scrape an entire website. It only remains to learn how to convert the extracted data to a more useful format, such as CSV.

Step #6: Export the Scraped Data to a CSV File

Export the list of dictionaries containing the scraped quote data to a CSV file:

```python
import csv

# Scraping logic...

# Open (or create) the CSV file and ensure it is properly closed afterward
with open("quotes.csv", "w", encoding="utf-8", newline="") as csv_file:
    writer = csv.writer(csv_file)

    # Write the header row
    writer.writerow(["Text", "Author", "Tags"])

    # Write each quote as a row
    for quote in quotes:
        writer.writerow(quote.values())
```

This snippet creates (or overwrites) a CSV file with `open()`. Then, it populates the output file using the `writerow()` method of the writer object returned by `csv.writer()`. Each call writes the values of one quote dictionary as a CSV-formatted row, in the same order as the header.
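`writerow(quote.values())` relies on every dictionary keeping its keys in the same order as the header row. `csv.DictWriter`, which the Selenium example earlier already uses, maps keys to columns by name instead. The sketch below writes to an in-memory `io.StringIO` buffer only so the output is easy to inspect. In the scraper, you would pass the opened `csv_file` instead. The sample quote is made up. Note that the header row then uses the dictionary keys (`text`, `author`, `tags`) rather than capitalized labels.

```python
import csv
import io

# Made-up sample data shaped like the scraped quotes
quotes = [
    {"text": "A sample quote.", "author": "Sample Author", "tags": "life, humor"},
]

buffer = io.StringIO()  # stands in for the file opened with open(..., newline="")
writer = csv.DictWriter(buffer, fieldnames=["text", "author", "tags"])
writer.writeheader()      # header row taken from fieldnames
writer.writerows(quotes)  # each dict key is matched to its column by name

print(buffer.getvalue())
```

Because the `tags` value contains a comma, the `csv` module automatically wraps it in double quotes in the output.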

Amazing! You went from raw data contained in a website to structured data stored in a CSV file. The data extraction process is complete, and you can now take a look at the entire Python scraper.

Step #7: Put It All Together

This is what the complete data scraping Python script looks like:

```python
# pip install requests beautifulsoup4 lxml

import requests
from bs4 import BeautifulSoup
import csv

def scrape_page(soup, quotes):
    # Retrieve all the quote <div> HTML elements on the page
    quote_elements = soup.find_all("div", class_="quote")

    # Iterate over the list of quote elements and apply the scraping logic
    for quote_element in quote_elements:
        # Extract the text of the quote
        text = quote_element.find("span", class_="text").get_text(strip=True)

        # Extract the author of the quote
        author = quote_element.find("small", class_="author").get_text(strip=True)

        # Extract the tag <a> HTML elements related to the quote
        tag_elements = quote_element.select(".tags .tag")

        # Store the list of tag strings in a list
        tags = []
        for tag_element in tag_elements:
            tags.append(tag_element.get_text(strip=True))

        # Append a dictionary containing the scraped quote data
        quotes.append(
            {
                "text": text,
                "author": author,
                "tags": ", ".join(tags)  # Merge the tags into an "A, B, ..., Z" string
            }
        )

# The URL of the home page of the target website
base_url = "https://quotes.toscrape.com"

# Retrieve the target web page
page = requests.get(base_url)

# Parse the target web page with Beautiful Soup
soup = BeautifulSoup(page.text, "lxml")

# Where to store the scraped data
quotes = []

# Scrape the home page
scrape_page(soup, quotes)

# Get the "Next →" HTML element
next_li_element = soup.find("li", class_="next")

# Keep going as long as there is a next page to scrape
while next_li_element is not None:
    next_page_relative_url = next_li_element.find("a", href=True)["href"]

    # Get the new page
    page = requests.get(base_url + next_page_relative_url)

    # Parse the new page
    soup = BeautifulSoup(page.text, "lxml")

    # Scrape the new page
    scrape_page(soup, quotes)

    # Look for the "Next →" HTML element in the new page
    next_li_element = soup.find("li", class_="next")

# Open (or create) the CSV file and ensure it is properly closed afterward
with open("quotes.csv", "w", encoding="utf-8", newline="") as csv_file:
    writer = csv.writer(csv_file)

    # Write the header row
    writer.writerow(["Text", "Author", "Tags"])

    # Write each quote as a row
    for quote in quotes:
        writer.writerow(quote.values())
```

As shown here, you can build a Python web scraper in fewer than 80 lines of code. This script crawls an entire website, automatically extracts all its data, and exports it to CSV.

Congrats! You just learned how to perform Python web scraping with Requests and Beautiful Soup.

With the terminal inside the project's directory, launch the Python script with:

```bash
python3 scraper.py
```

Or, on some systems:

```bash
python scraper.py
```

Wait for the process to end, and you will have a `quotes.csv` file. Open it, and it should contain the following data:

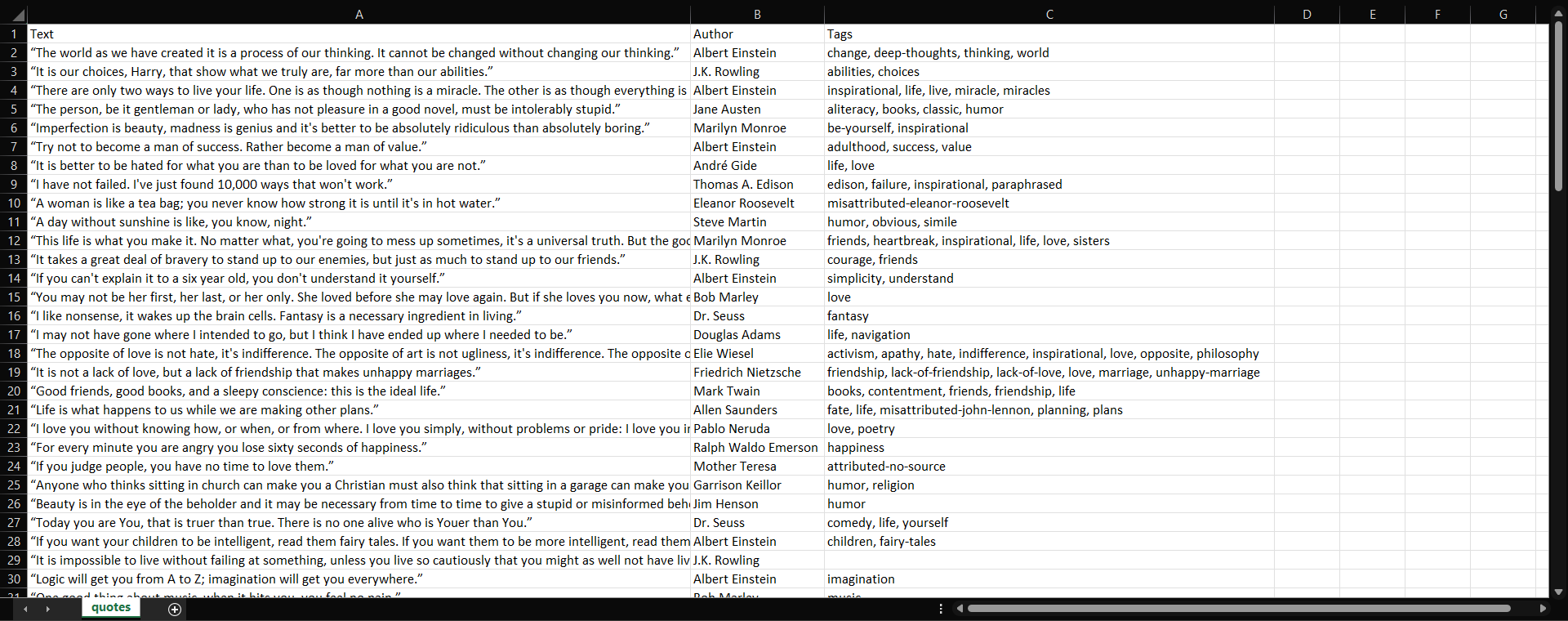

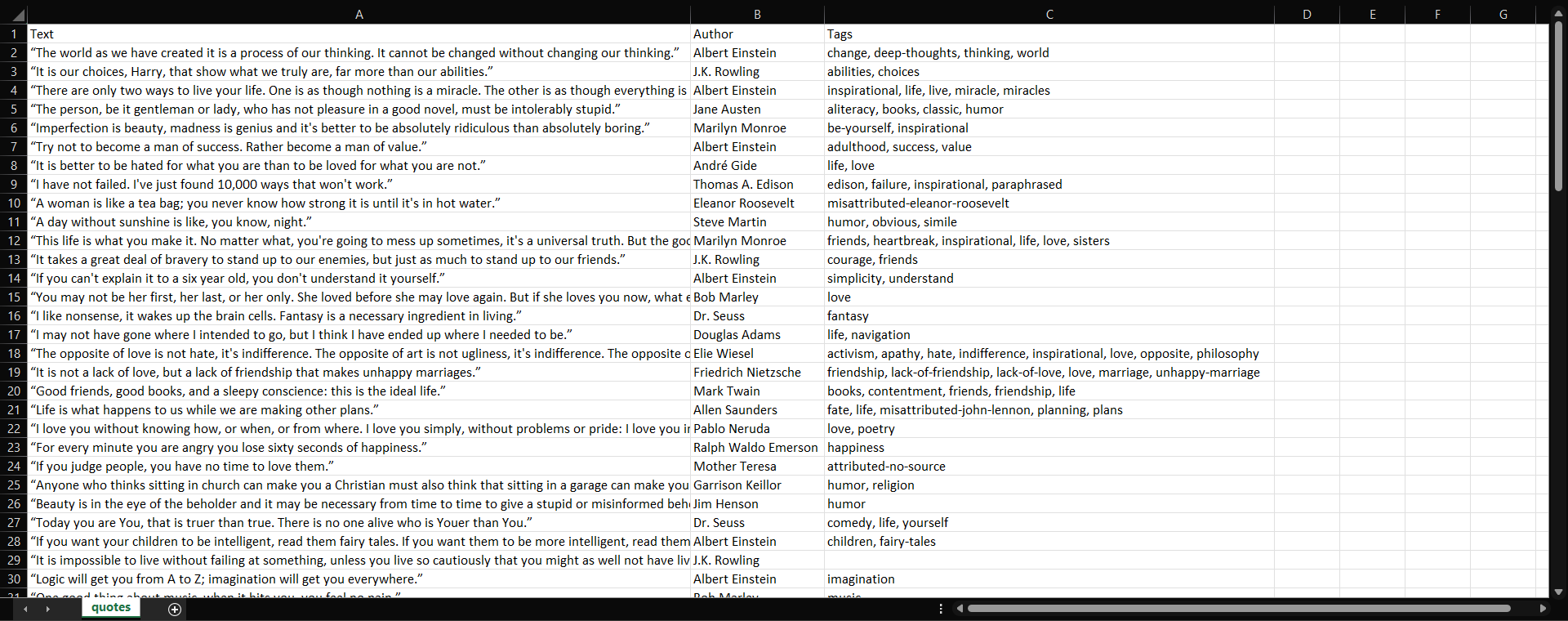

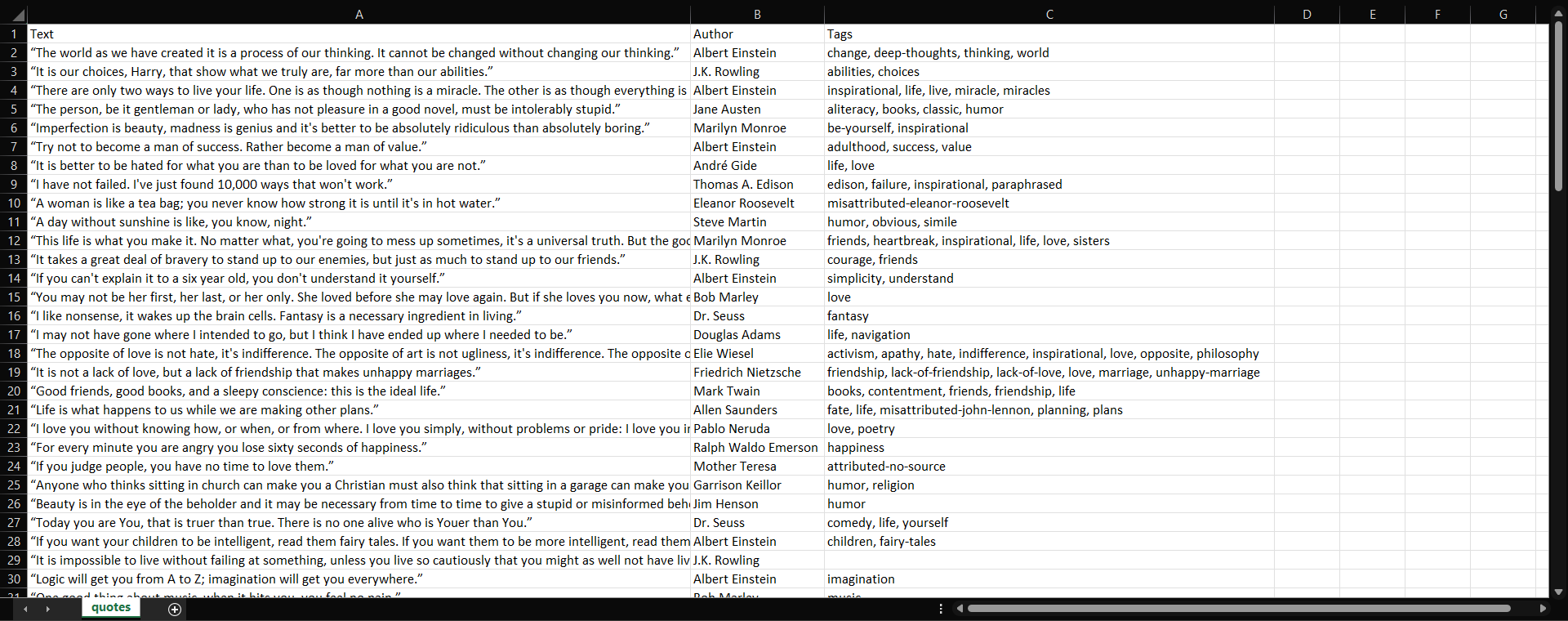

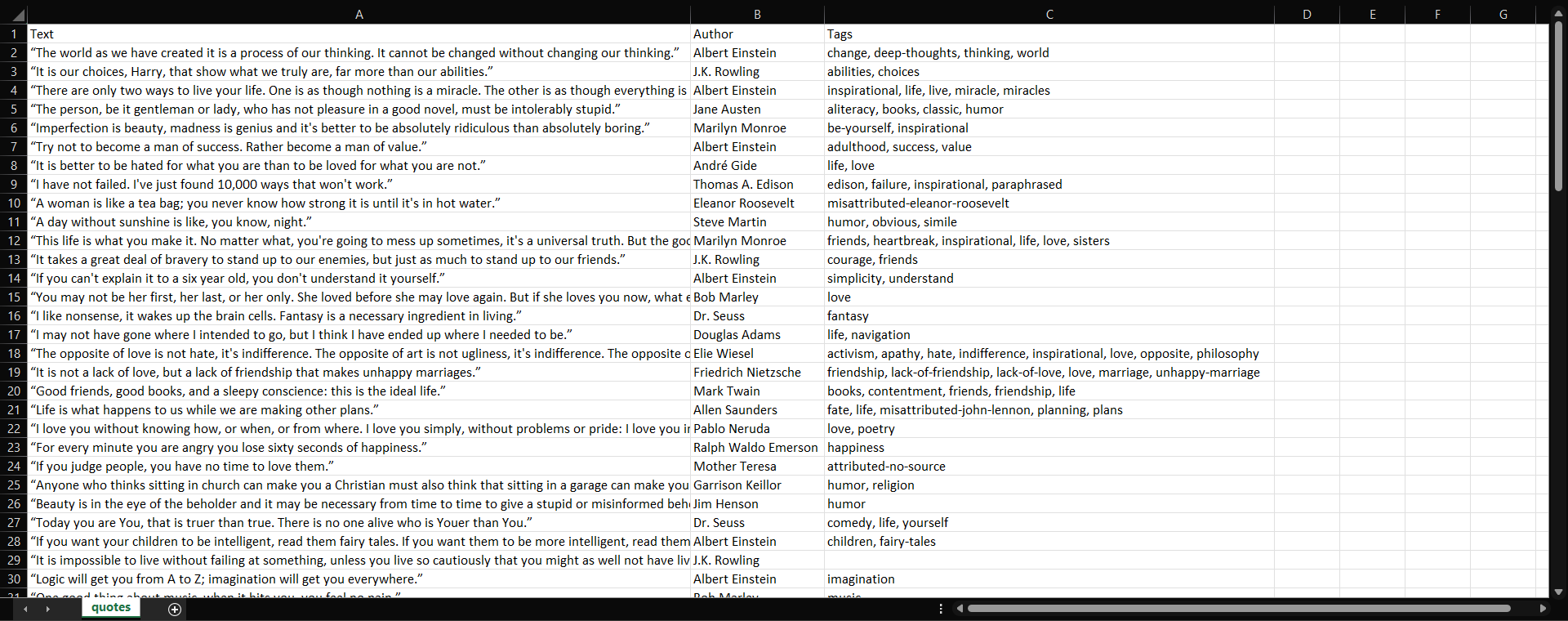

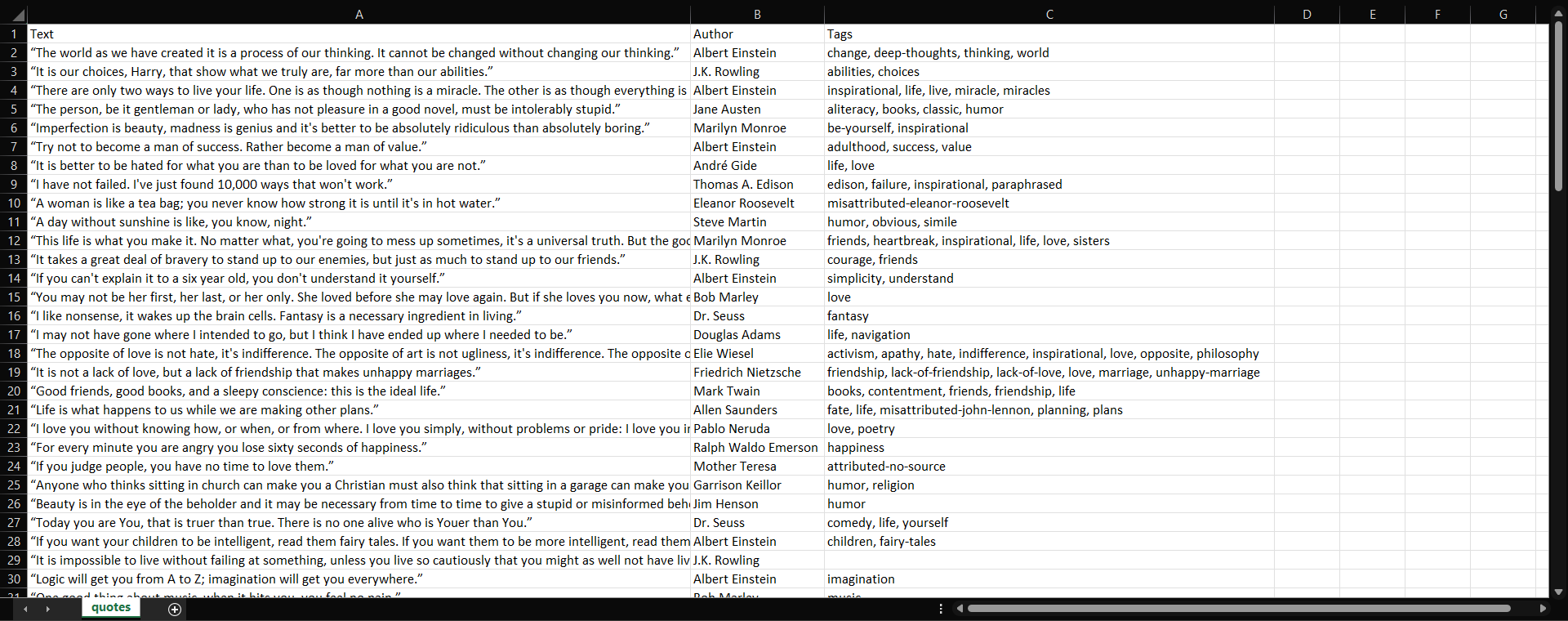

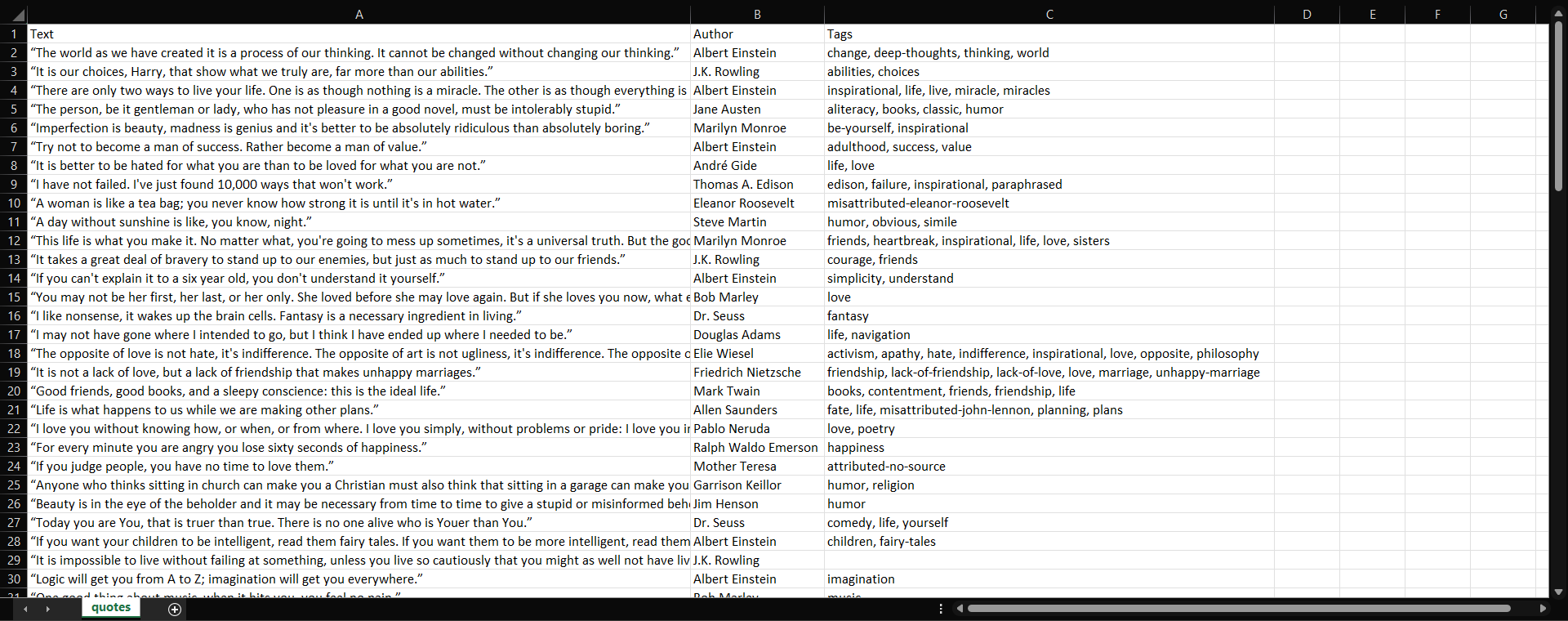

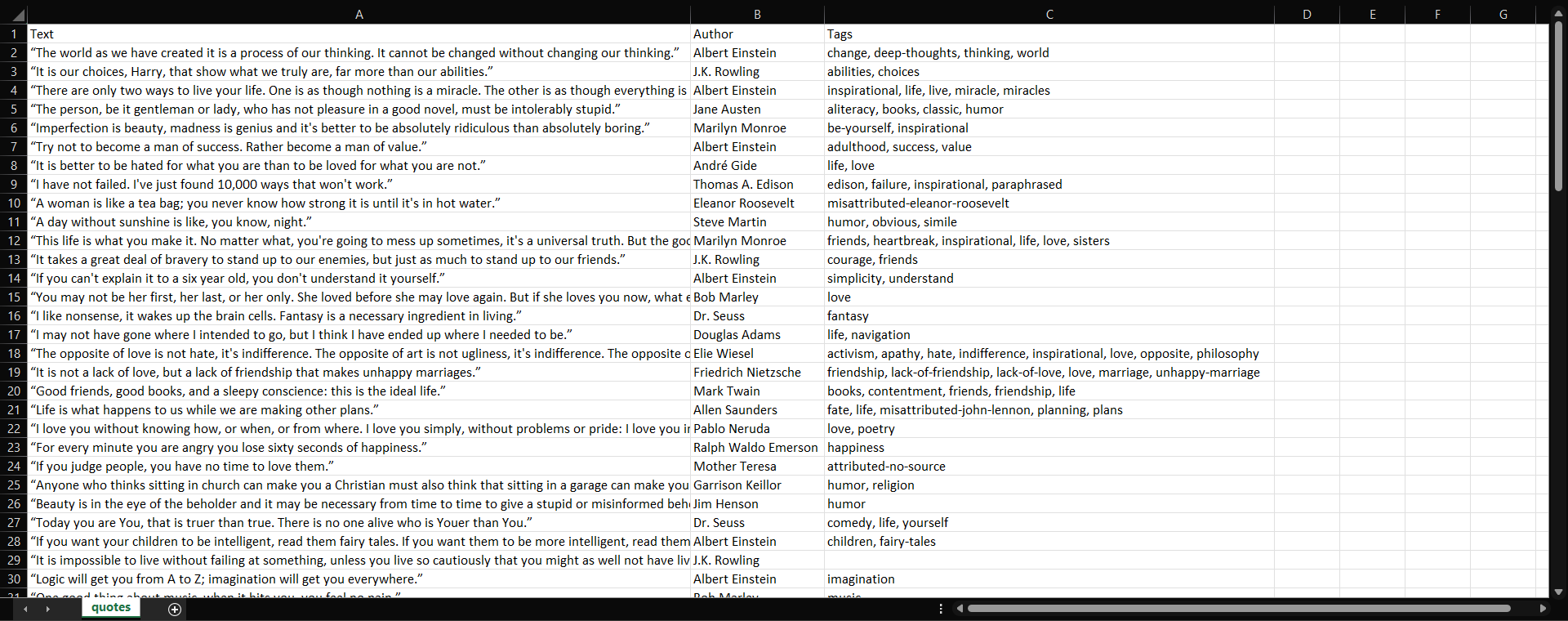

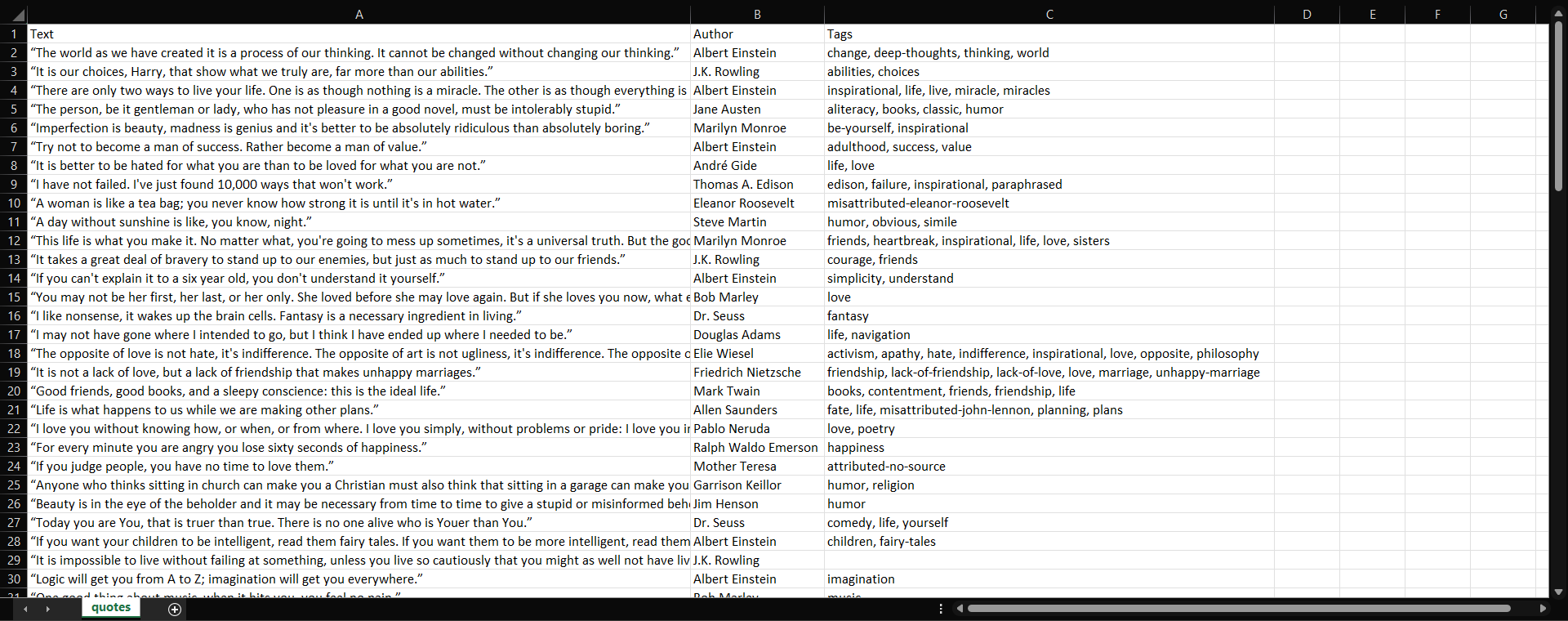

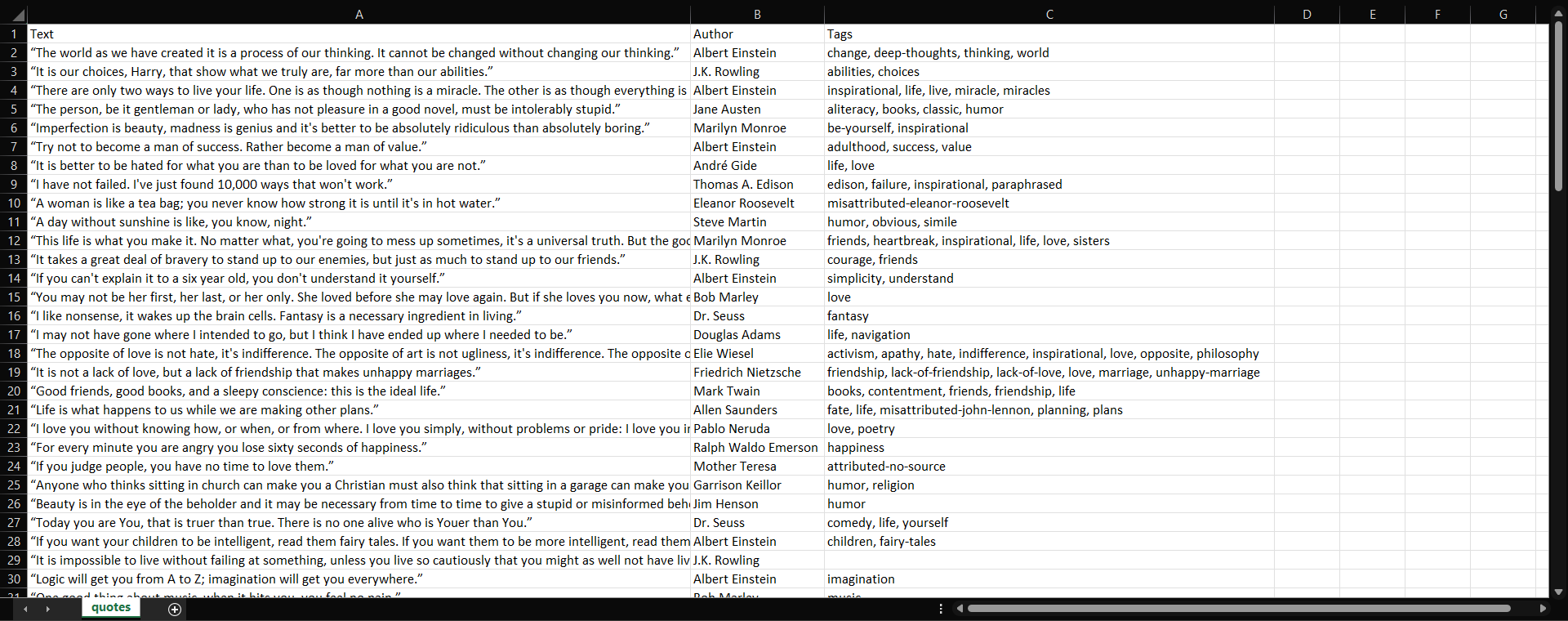

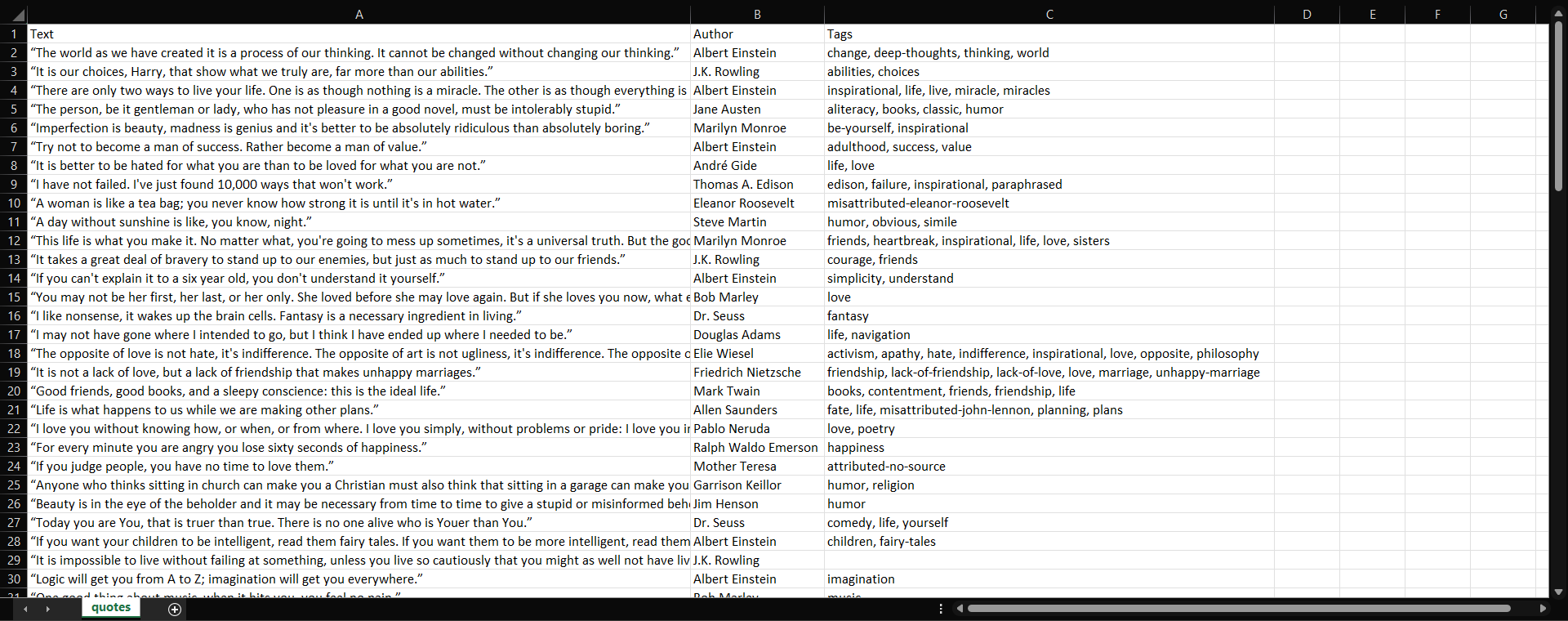

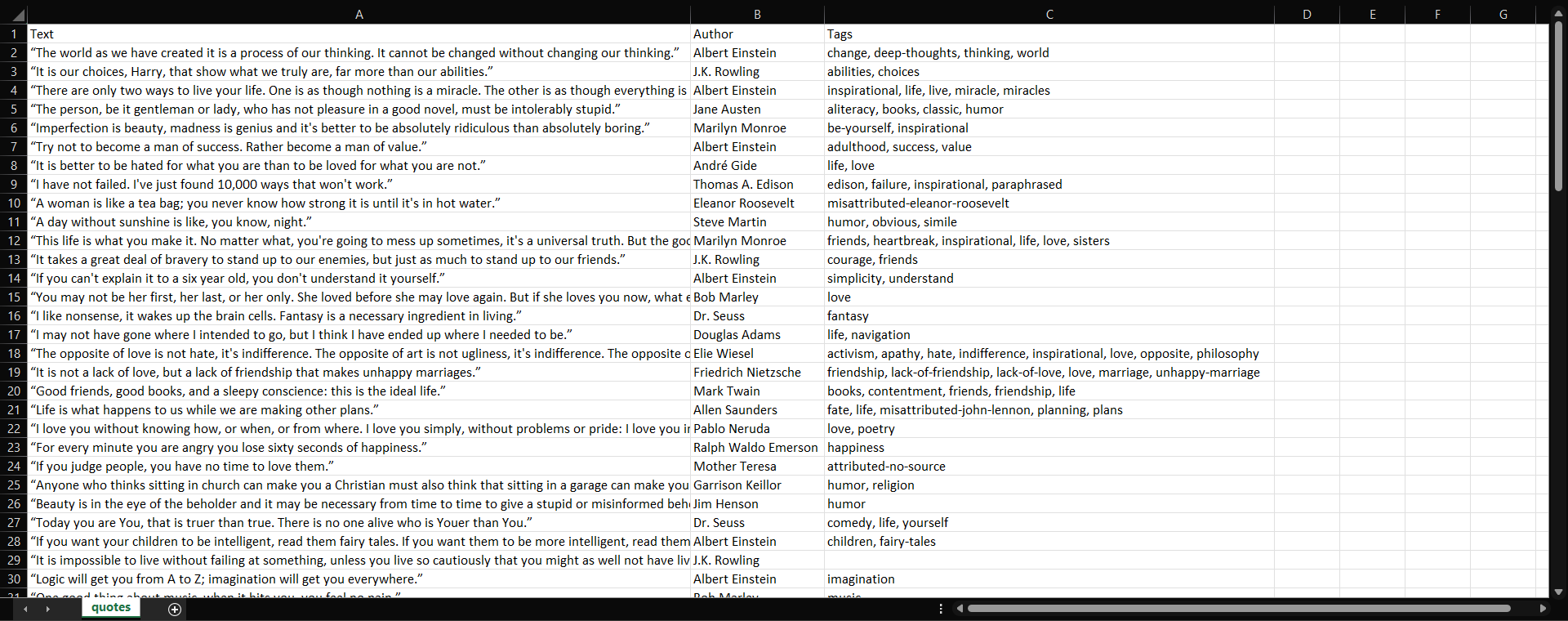

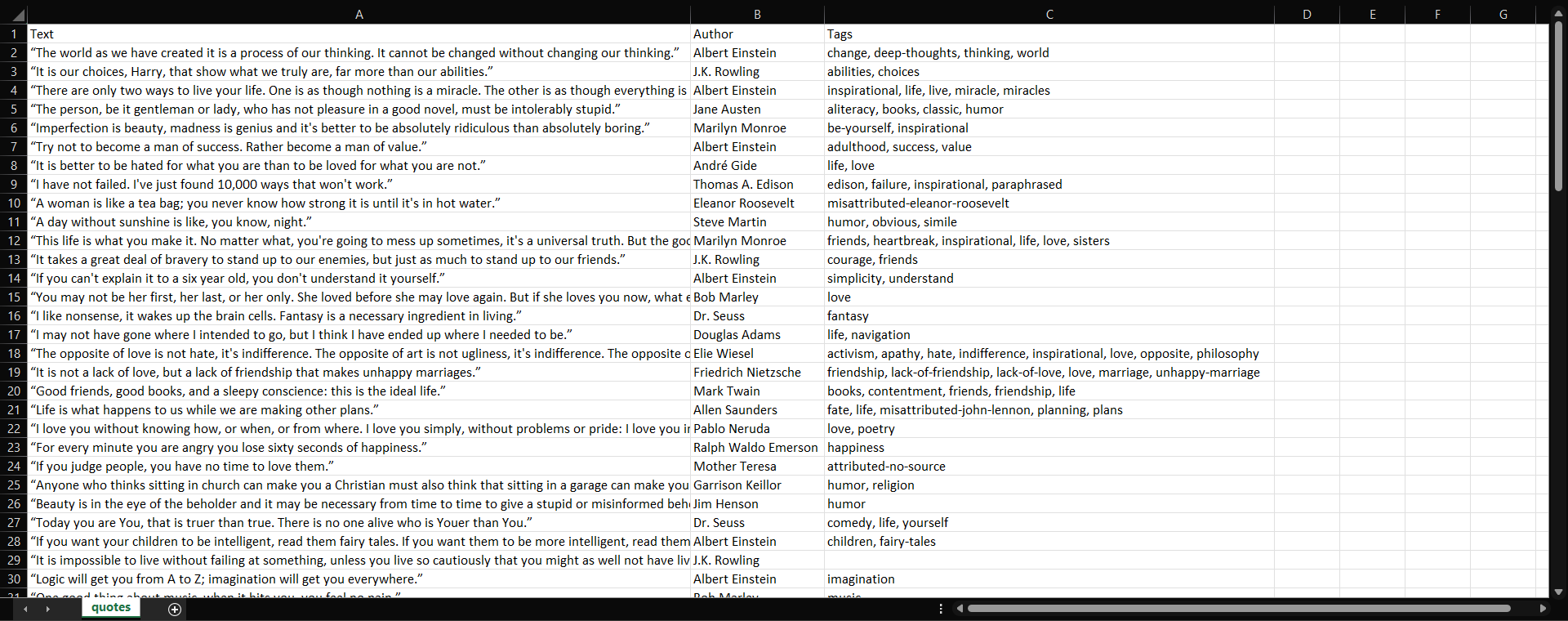

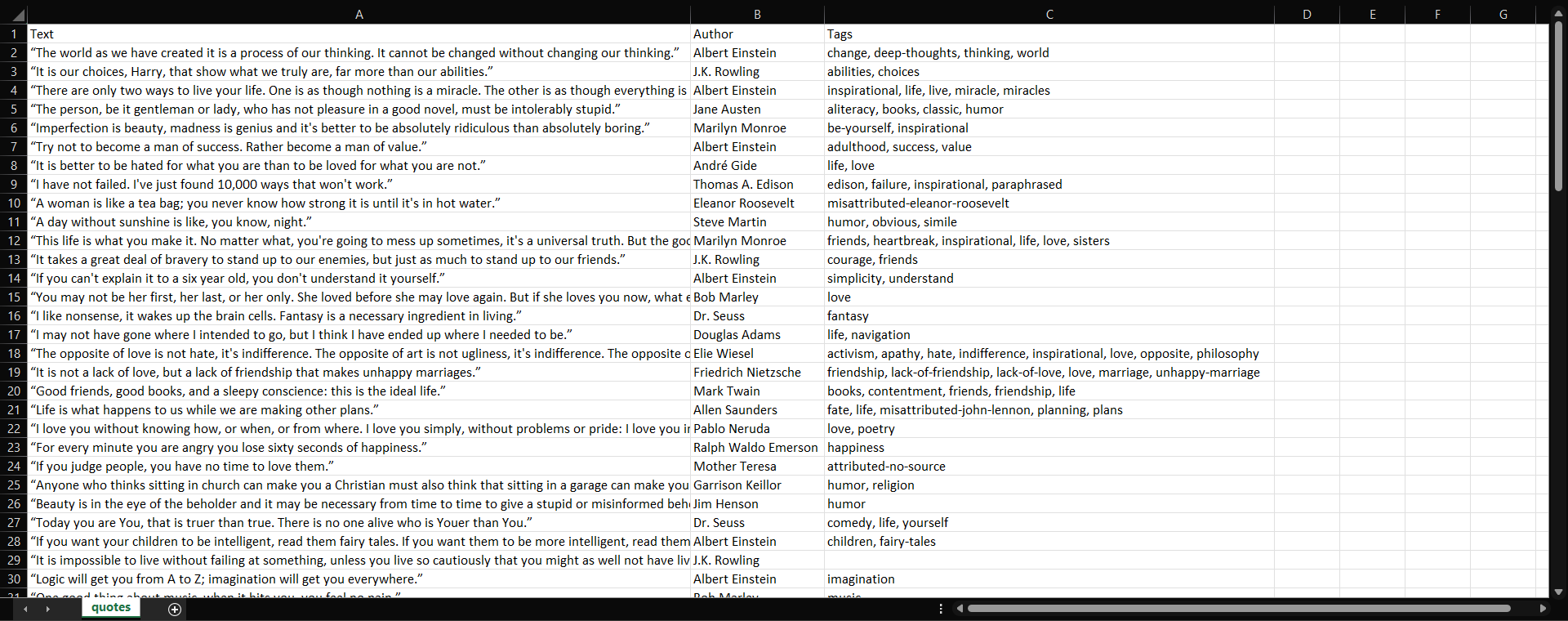

The quotes.csv file with all quotes from the entire site

Et voilà! You now have all 100 quotes contained in the target website in a single file in an easy-to-read format.

Scrapy: The All-in-One Python Web Scraping Framework

The narrative so far has been that to scrape websites in Python, you either need an HTTP client + HTML parser setup or a browser automation tool. However, that is not entirely true. There are dedicated all-in-one scraping frameworks that provide everything you need within a single library.

The most popular scraping framework in Python is Scrapy. Out of the box, it works with static sites, but it can be extended to handle dynamic sites using tools like Scrapy Splash or Scrapy Playwright.

In its standard form, Scrapy combines HTTP client capabilities and HTML parsing into one powerful package. In this section, you will learn how to set up a Scrapy project to scrape the static version of Quotes to Scrape.

Install Scrapy with:

```bash
pip install scrapy
```

Then, create a new Scrapy project and generate a spider for Quotes to Scrape:

```bash
scrapy startproject quotes_scraper
cd quotes_scraper
scrapy genspider quotes quotes.toscrape.com
```

These commands create a new Scrapy project folder called `quotes_scraper`, move into it, and generate a spider named `quotes` targeting the site `quotes.toscrape.com`.

If you are not familiar with this procedure, refer to our guide on scraping with Scrapy.

Edit the spider file (`quotes_scraper/spiders/quotes.py`) and add the following Python scraping logic:

```python
# quotes_scraper/spiders/quotes.py
import scrapy

class QuotesSpider(scrapy.Spider):
    name = "quotes"

    # Sites to visit
    start_urls = [
        "http://quotes.toscrape.com/"
    ]

    def parse(self, response):
        # Scraping logic
        for quote in response.css(".quote"):
            yield {
                "text": quote.css(".text::text").get(),
                "author": quote.css(".author::text").get(),
                "tags": quote.css(".tags .tag::text").getall(),
            }

        # Pagination handling logic
        next_page = response.css("li.next a::attr(href)").get()
        if next_page:
            yield response.follow(next_page, self.parse)
```

Behind the scenes, Scrapy sends HTTP requests to the target pages and uses Parsel, the selector library it relies on for HTML parsing, to extract the data as specified in your spider.

You can now export the scraped data to CSV from the command line with:

```bash
scrapy crawl quotes -o quotes.csv
```

Or to JSON with:

```bash
scrapy crawl quotes -o quotes.json
```

These commands run the spider and automatically save the extracted data in the format inferred from the output file extension.
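Instead of passing `-o` on every run, you can also declare export targets in Scrapy's `FEEDS` setting. Keep in mind that `-o` appends to an existing file, which leaves an invalid JSON file behind on a second run, while `-O` (uppercase) overwrites it. The configuration below is a possible sketch, assuming Scrapy 2.4 or later (where the per-feed `overwrite` option exists). With it in place, a plain `scrapy crawl quotes` writes both files.

```python
# quotes_scraper/settings.py (excerpt)
# Each key is an output URI; each value holds the feed options for that file
FEEDS = {
    "quotes.csv": {
        "format": "csv",
        "overwrite": True,  # replace the file instead of appending to it
    },
    "quotes.json": {
        "format": "json",
        "encoding": "utf8",  # write non-ASCII characters as-is instead of \uXXXX escapes
        "indent": 4,
        "overwrite": True,
    },
}
```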

Further reading:

Scrapy vs Requests for Web Scraping: Which to Choose?

Scrapy vs Beautiful Soup: Detailed Comparison

Scrapy vs Playwright: Web Scraping Comparison Guide

Scrapy vs Selenium for Web Scraping

Scraping Challenges and How to Overcome Them in Python

In this tutorial, you learned how to scrape sites that have been built to make web scraping easy. When applying these techniques to real-world targets, you will encounter many more scraping challenges.

Some of the most common scraping issues and anti-scraping techniques (along with guides to address them) include:

- Websites frequently changing their HTML structure, breaking your element selection logic:
  - How to Use AI for Web Scraping: Integrate AI in the data extraction logic via dedicated Python AI scraping libraries to retrieve data via simple prompts.
  - Best AI Web Scraping Tools: Complete Comparison
  - Web Scraping with Gemini AI in Python – Step-by-Step Guide
- IP bans and rate limiting stopping your script after too many requests:
  - How to Use Proxies to Rotate IP Addresses in Python: Hide your IP and avoid rate limiters by using a rotating proxy.
  - 10 Best Rotating Proxies: Ultimate Comparison
- Fingerprint issues raising suspicion on the target server and triggering blocks, along with common techniques to defend against them:
  - HTTP Headers for Web Scraping: Set the right headers in your HTTP client to reduce blocks.
  - User-Agents For Web Scraping 101
  - Web Scraping With curl_cffi and Python in 2025: `curl_cffi` is a Python binding for curl-impersonate, a modified version of cURL designed to avoid TLS fingerprinting issues.
  - How to Use Undetected ChromeDriver for Web Scraping: Undetected ChromeDriver is a patched ChromeDriver for Selenium optimized to avoid blocks.
  - Guide to Web Scraping With SeleniumBase in 2025: SeleniumBase is a Selenium-based framework tweaked to limit detection.
- CAPTCHAs and JavaScript challenges blocking access to dynamic web pages:
  - Bypassing CAPTCHAs with Python: Techniques
  - How to Bypass CAPTCHAs With Playwright
  - How to Bypass CAPTCHAs With Selenium in Python
- Legal and ethical considerations requiring careful compliance:
  - Ethical Data Collection: Practical tools and guidelines to build ethical scrapers.
  - Robots.txt for Web Scraping Guide: Respect the `robots.txt` file to perform ethical web scraping in Python.
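For the `robots.txt` item in the list above, Python's standard library already ships a parser in `urllib.robotparser`. The sketch below feeds it an inline, made-up `robots.txt` so it runs offline. Against a real site, you would call `set_url()` with the site's `/robots.txt` URL and then `read()` instead of `parse()`. The `MyScraperBot` user-agent string and the `example.com` URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt rules used for illustration only
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

user_agent = "MyScraperBot"  # placeholder user-agent
print(parser.can_fetch(user_agent, "https://example.com/page/2/"))    # True
print(parser.can_fetch(user_agent, "https://example.com/private/x"))  # False
print(parser.crawl_delay(user_agent))                                 # 2
```

A scraper could check `can_fetch()` before each request and `time.sleep()` for the returned crawl delay between requests.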


Most of these solutions are workarounds that often work only temporarily. This means you need to continually maintain your scraping scripts. Additionally, you may sometimes require access to premium resources, such as high-quality web proxies.

Thus, in production environments or when scraping becomes too complex, it makes sense to rely on a complete web data provider like Bright Data.

Bright Data offers a wide range of services for web scraping, including:

- Unlocker API: Automatically solves blocks, CAPTCHAs, and anti-bot challenges to guarantee successful page retrieval at scale.
- Crawl API: Parses entire websites into structured AI-ready data.
- SERP API: Retrieves real-time search engine results (Google, Bing, more) with geo-targeting, device emulation, and anti-CAPTCHA built in.
- Browser API: Launches remote headless browsers with stealth fingerprinting, automating JavaScript-heavy page rendering and complex interactions. It works with Playwright and Selenium.
- CAPTCHA Solver: A rapid and automated CAPTCHA solver that can bypass challenges from reCAPTCHA, hCaptcha, px_captcha, SimpleCaptcha, GeeTest CAPTCHA, and more.
- Web Scraper APIs: Prebuilt scrapers to extract live data from 100+ top sites such as LinkedIn, Amazon, TikTok, and many others.
- Proxy Services: 150M+ IPs from residential proxies, mobile proxies, ISP proxies, and datacenter proxies.

All these solutions integrate seamlessly with Python or any other tech stack. They greatly simplify the implementation of your Python web scraping projects.

Conclusion

In this blog post, you learned what web scraping with Python is, what you need to get started, and how to do it using several tools. You now have all the basics to scrape a site in Python, along with further reading links to help you sharpen your scraping skills.

Keep in mind that web scraping comes with many challenges. Anti-bot and anti-scraping technologies are becoming increasingly common. That is why you might require advanced web scraping solutions, like those provided by Bright Data.

If you are not interested in scraping yourself but just want access to the data, consider exploring our dataset services.

Create a Bright Data account for free and use our solutions to take your Python web scraper to the next level!