Top 13 AI Agent Frameworks in 2025

In this guide, you will:

Understand what AI agent frameworks are

Discover the key factors to consider when evaluating these libraries

Explore the best AI agent frameworks

Compare these tools in a clear summary table

Let’s dive in!

What Is an AI Agent Framework?

AI agent frameworks are tools that simplify the creation, deployment, and management of autonomous AI agents. In this context, an AI agent is a software entity that perceives its environment, processes information, and takes actions to achieve specific goals.

These frameworks offer pre-built components and abstractions that help developers build AI-powered agents, typically on top of LLMs. The resulting systems can take in inputs, reason over them, and decide which actions to take.

Key features provided by these tools include agent architecture, memory management, task orchestration, and tool integration.
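To make the perceive, process, act cycle concrete, here is a minimal, framework-free sketch in Python. Every name in it (`TOOLS`, `fake_llm`, `run_agent`) is hypothetical and exists only for illustration. A real framework would replace `fake_llm` with a call to an actual model and add far richer memory and orchestration.

```python
from typing import Callable

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split())),
    "upper": lambda args: args.upper(),
}


def fake_llm(goal: str, memory: list[str]) -> str:
    """Stand-in for a real LLM call (assumption: no model is contacted).

    Returns either 'CALL <tool> <args>' or 'FINAL <answer>'.
    """
    if not memory:
        # Nothing observed yet: decide to use a tool.
        return "CALL add 2 3"
    # A tool result is already in memory: finish with it.
    return f"FINAL The result is {memory[-1]}"


def run_agent(goal: str, max_steps: int = 5) -> str:
    memory: list[str] = []  # short-term memory of tool observations
    for _step in range(max_steps):
        decision = fake_llm(goal, memory)        # process the current state
        kind, _, rest = decision.partition(" ")
        if kind == "FINAL":
            return rest                          # goal reached
        tool_name, _, args = rest.partition(" ")
        memory.append(TOOLS[tool_name](args))    # act, then store the observation
    return "Stopped: step limit reached"


print(run_agent("What is 2 + 3?"))
```

The loop, the tool registry, and the memory list correspond to the task orchestration, tool integration, and memory management features listed above.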

Aspects to Consider When Selecting the Best Frameworks for Building AI Agents

When comparing the best AI agent frameworks available, here are the main elements to take into consideration:

Repository : A link to the tool’s codebase, where you can find all relevant information.

Programming language : The language the library is written in and the ecosystem its package is distributed for.

Developed by : The team or company behind the tool.

GitHub stars : The number of stars the repository has received, indicating its popularity.

Features : A list of capabilities offered by the framework.

Supported models : A list of AI models or providers the tool integrates with.

“AI frameworks are the new runtime for intelligent agents, defining how they think, act, and scale. Powering these frameworks with real-time web access and reliable data infrastructure enables developers to build smarter, faster, production-ready AI systems.” — Ariel Shulman , Chief Product Officer, Bright Data

Best AI Agent Frameworks

Below are the best frameworks for building AI agents on the market, selected based on the criteria presented earlier.

Note : The list below is not a ranking, but rather a collection of the best AI agent frameworks. Each tool is suited to specific use cases and scenarios.

AutoGen

AutoGen is a Microsoft-backed framework for building autonomous or human-assisted multi-agent AI systems. It equips you with flexible APIs, developer tools, and a no-code GUI (AutoGen Studio) to prototype, run, and evaluate AI agents. These agents can cover tasks like web browsing, code execution, chat-based workflows, and more. It natively supports the Python and .NET ecosystems.

🔗 Repository : GitHub

💻 Programming language : Python, .NET

👨💻 Developed by : Microsoft

⭐ GitHub stars : 43.1k+

⚙️ Features :

Cross-language support for Python and .NET

Supports both autonomous and human-in-the-loop agents

GUI support through AutoGen Studio

Layered, extensible architecture for flexibility

Core API, AgentChat API, and Extensions API

Built-in support for web browsing agents via Playwright

Multimodal agents for tasks involving browser automation and user interaction

Round-robin group chat support for orchestrating agent teams

Termination conditions allow agent chats to end based on custom rules

Includes benchmarking tools via AutoGen Bench

Rich ecosystem of tools, packages, and community-contributed agents

🧠 Supported models : OpenAI, Azure OpenAI, Azure AI Foundry, Anthropic (experimental support), Ollama (experimental support), Gemini (experimental support), and Semantic Kernel Adapter
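The round-robin group chat and termination condition features can be pictured with a small, library-free sketch. This is not AutoGen's API: `writer`, `critic`, and `round_robin_chat` are hypothetical names, and each "agent" is a plain function standing in for an LLM-backed agent.

```python
from itertools import cycle
from typing import Callable

# An agent takes the transcript so far and returns its next message.
Agent = Callable[[list[str]], str]


def writer(transcript: list[str]) -> str:
    drafts = sum(m.startswith("draft") for m in transcript)
    return f"draft v{drafts + 1}"


def critic(transcript: list[str]) -> str:
    # Approve once the writer has produced a second draft.
    return "APPROVE" if transcript[-1] == "draft v2" else "please revise"


def round_robin_chat(agents, stop_when, max_messages=10):
    transcript: list[str] = []
    for agent in cycle(agents):  # agents speak in a fixed, repeating order
        transcript.append(agent(transcript))
        # Stop on the custom rule, or on a hard message cap as a safety net.
        if stop_when(transcript) or len(transcript) >= max_messages:
            return transcript


log = round_robin_chat(
    agents=[writer, critic],
    stop_when=lambda t: t[-1] == "APPROVE",  # custom termination rule
)
print(log)
```

Combining a content-based rule with a message cap is a common design choice, since it keeps a chat from running forever when the approval never arrives.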

LangChain

LangChain is an open-source Python framework for building powerful, production-ready applications and agents using LLMs. It simplifies AI development by letting you chain together modular components and third-party integrations. LangChain helps you move fast and adapt as AI technology evolves thanks to its flexible, future-proof design and extensive ecosystem.

Its set of tools includes LangGraph, a low-level orchestration framework for building controllable, stateful AI agents.

Discover how to integrate web scraping in LangChain workflows.
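The idea of chaining modular, swappable components can be sketched with plain function composition. The snippet below deliberately avoids LangChain's own classes. `chain`, `prompt_template`, `stub_model`, and `output_parser` are hypothetical names, and the "model" is a stub that only echoes its prompt.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps left to right into a single callable."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def prompt_template(topic: str) -> str:
    return f"Write one sentence about {topic}."


def stub_model(prompt: str) -> str:
    # Assumption: stands in for a real LLM; it just echoes the prompt.
    return f"[model output for: {prompt}]"


def output_parser(text: str) -> str:
    # Remove the surrounding brackets added by the stub model.
    return text.strip("[]")


pipeline = chain(prompt_template, stub_model, output_parser)
print(pipeline("web scraping"))
```

Because every step shares the same call shape, swapping `stub_model` for another provider means changing one argument to `chain`, which is the kind of flexibility the framework advertises.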

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : Community

⭐ GitHub stars : 106k+

⚙️ Features :

Possibility to easily swap out language models, data sources, and other components

Ability to connect language models to various data sources via an intuitive high-level API

Tools for refining prompts to guide language models and obtain more precise outputs

Support for developing RAG systems

Memory modules that allow language models to retain information about past interactions

Tools for deploying and monitoring language model applications

High degree of customization and flexibility due to a modular design

High scalability

Comprehensive documentation with a variety of examples

🧠 Supported models : OpenAI, Google, Hugging Face, Azure, AWS, Anthropic, and others

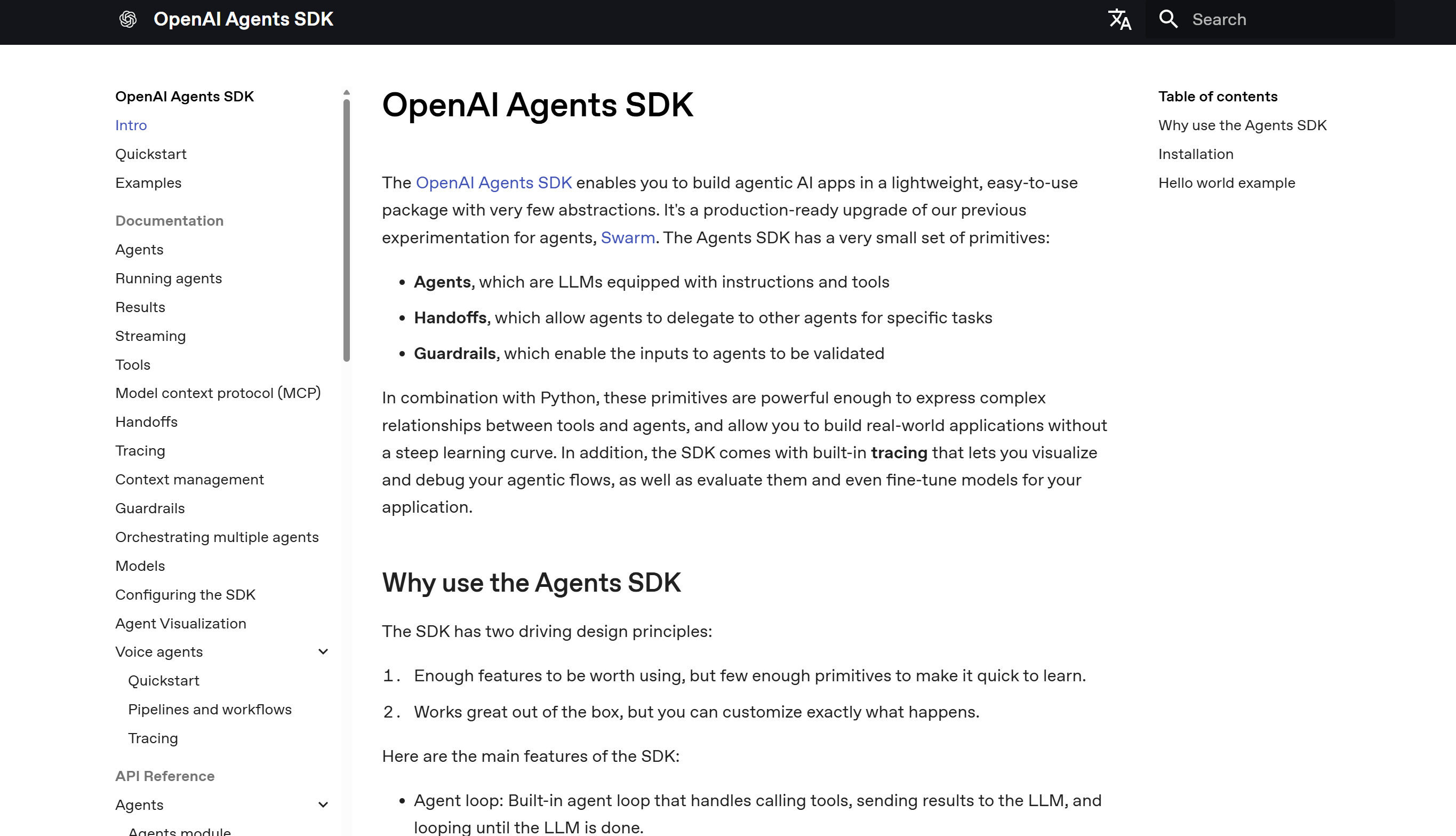

OpenAI Agents SDK

The OpenAI Agents SDK (the production-ready successor to OpenAI's experimental Swarm project) is a framework for building multi-agent AI workflows. It provides a minimal set of primitives:

Agents : LLMs equipped with instructions and tools.

Handoffs : To allow agents to delegate to other agents for specific tasks.

Guardrails : To validate the inputs passed to agents.

OpenAI Agents SDK is built with simplicity and flexibility in mind. It supports complex use cases, includes built-in tracing and evaluation, and fully integrates with Python.
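The three primitives can be illustrated without the SDK itself. The following is a conceptual sketch only: `SimpleAgent`, `input_guardrail`, and `run` are hypothetical names rather than the SDK's classes, and keyword matching replaces the LLM's own decision to hand off.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SimpleAgent:
    name: str
    respond: Callable[[str], str]  # stand-in for an LLM plus instructions
    handoffs: dict[str, "SimpleAgent"] = field(default_factory=dict)


def input_guardrail(text: str) -> None:
    """Guardrail: reject input before any agent sees it."""
    if not text.strip():
        raise ValueError("empty input")


def run(agent: SimpleAgent, text: str) -> str:
    input_guardrail(text)
    # Handoff: delegate to a specialist whose keyword appears in the input.
    for keyword, specialist in agent.handoffs.items():
        if keyword in text.lower():
            return run(specialist, text)
    return f"{agent.name}: {agent.respond(text)}"


billing = SimpleAgent("billing", lambda t: "I can help with your invoice.")
triage = SimpleAgent("triage", lambda t: "How can I help?", {"invoice": billing})

print(run(triage, "My invoice is wrong"))
```

Here the triage agent answers general questions itself and delegates anything mentioning an invoice to the billing agent, while the guardrail blocks empty input up front.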

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : OpenAI

⭐ GitHub stars : 8.6k+

⚙️ Features :

Production-ready and lightweight SDK for building agentic AI apps

Allows agents to delegate tasks to other agents

Guardrails validate agent inputs and enforce constraints

Built-in agent loop handles tool calls, LLM responses, and repetition until completion

Python-first design allows chaining and orchestration using native Python features

Function tools convert Python functions into tools with auto schema and validation

Tracing allows visualization, debugging, and monitoring of agent flows

Supports evaluation, fine-tuning, and distillation using OpenAI tools

Minimal primitives make it quick to learn and easy to customize

🧠 Supported models : OpenAI
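The "function tools" feature rests on a simple idea: a function's signature, type hints, and docstring already contain what a tool schema needs. The standard-library sketch below shows that idea under stated assumptions. `function_schema` and `JSON_TYPES` are hypothetical names, only four basic types are handled, and the SDK's actual schema format may differ.

```python
import inspect
from typing import get_type_hints

# Assumption: a minimal mapping from Python types to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_schema(func) -> dict:
    hints = get_type_hints(func)
    hints.pop("return", None)  # the return type is not a parameter
    params = inspect.signature(func).parameters
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": {n: {"type": JSON_TYPES[t]} for n, t in hints.items()},
            # Parameters without a default value are required.
            "required": [n for n, p in params.items() if p.default is p.empty],
        },
    }


def get_weather(city: str, days: int = 1) -> str:
    """Return a short forecast for a city."""
    return f"{days}-day forecast for {city}"


schema = function_schema(get_weather)
print(schema)
```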

Langflow

Langflow is a low-code framework for visually building and deploying AI agents and workflows. It supports any API, model, or database, and includes a built-in API server to turn agents into endpoints. Langflow supports major LLMs and vector databases and offers a growing library of AI tools, all without heavy setup.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : Community

⭐ GitHub stars : 54.9k+

⚙️ Features :

Possibility to start quickly and iterate using a visual builder

Access to underlying code for customizing any component using Python

Ability to test and refine flows in a step-by-step playground environment

Support for multi-agent orchestration, conversation management, and retrieval

Option to deploy as an API or export flows as JSON for Python applications

Observability through integrations with tools like LangSmith and LangFuse

Enterprise-grade security and scalability for production environments

🧠 Supported models : Amazon Bedrock, Anthropic, Azure OpenAI, Cohere, DeepSeek, Google, Groq, Hugging Face API, IBM Watsonx, LMStudio, Maritalk, Mistral, Novita AI, NVIDIA, Ollama, OpenAI, OpenRouter, Perplexity, Qianfan, SambaNova, Vertex AI, and xAI
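To give a feel for what "export flows as JSON for Python applications" means in practice, here is a toy sketch of a flow described as JSON and executed in dependency order. The JSON layout, `COMPONENTS`, and `run_flow` are all assumptions made for illustration. This is not Langflow's actual export format or loader.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical flow: three nodes wired in a straight line.
FLOW_JSON = """
{
  "nodes": {"input": "passthrough", "clean": "strip", "shout": "upper"},
  "edges": [["input", "clean"], ["clean", "shout"]]
}
"""

# Hypothetical component library keyed by component type.
COMPONENTS = {
    "passthrough": lambda s: s,
    "strip": str.strip,
    "upper": str.upper,
}


def run_flow(flow_json: str, value: str) -> str:
    flow = json.loads(flow_json)
    # Map each node to the set of nodes it depends on.
    deps = {node: set() for node in flow["nodes"]}
    for src, dst in flow["edges"]:
        deps[dst].add(src)
    # Assumption: the flow is linear, so each node transforms the previous output.
    for node in TopologicalSorter(deps).static_order():
        value = COMPONENTS[flow["nodes"][node]](value)
    return value


print(run_flow(FLOW_JSON, "  hello  "))
```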

LlamaIndex

LlamaIndex (formerly known as GPT Index) is a framework for building LLM-powered agents over your data. It enables the creation of production-grade agents that can search, synthesize, and generate insights from complex enterprise data. It comes with several integrations and plugins for improved functionality.

🔗 Repository : GitHub

💻 Programming language : Python, TypeScript

👨💻 Developed by : LlamaIndex + community

⭐ GitHub stars : 40.9k+

⚙️ Features :

High-level API for quick prototyping

Low-level API for advanced customization of connectors, indices, retrievers, and more

API for building LLM-powered agents and agentic workflows

Supports context augmentation to integrate your private data with LLMs

Built-in tools for data ingestion from PDFs, APIs, SQL, and more

Intermediate data indexing formats optimized for LLM consumption

Pluggable query engines for question-answering through RAG (as demonstrated in our SERP data RAG chatbot guide)

Chat engines for multi-turn interactions over your data

Agent interface for tool-augmented, task-oriented LLM applications

Workflow support for event-driven, multi-step logic with multiple agents and tools

Tools for evaluation and observability of LLM application performance

Built-in support for multimodal apps

Supports self-hosted and managed deployments via LlamaCloud

LlamaParse for state-of-the-art document parsing

🧠 Supported models : AI21, Anthropic, AnyScale, Azure OpenAI, Bedrock, Clarifai, Cohere, Dashscope, Dashscope Multi-Modal, EverlyAI, Fireworks, Friendli, Gradient, Gradient Model Adapter, Groq, HuggingFace, Konko, LangChain, LiteLLM, Llama, LocalAI, MariTalk, MistralAI, Modelscope, MonsterAPI, MyMagic, NeutrinoAI, Nebius AI, NVIDIA, Ollama, OpenAI, OpenLLM, OpenRouter, PaLM, Perplexity, Pipeshift, PremAI, Portkey, Predibase, Replicate, RunGPT, SageMaker, SambaNova Systems, Together.ai, Unify AI, Vertex, vLLM, Xorbits Inference, and Yi
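The ingestion, indexing, and query-engine features follow the usual RAG pattern: index your documents, retrieve the most relevant one for a question, and place it in the prompt. The sketch below shows that pattern with simple keyword overlap instead of embeddings. It does not use LlamaIndex, and `DOCS`, `INDEX`, `retrieve`, and `build_prompt` are hypothetical names with made-up sample documents.

```python
import re
from collections import Counter

# Made-up sample documents standing in for ingested private data.
DOCS = {
    "pricing.txt": "The pro plan costs 20 dollars per month.",
    "support.txt": "Support is available by email on weekdays.",
}


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


# "Indexing": precompute a bag of words for each document.
INDEX = {name: tokenize(body) for name, body in DOCS.items()}


def retrieve(question: str) -> str:
    q = tokenize(question)
    # Score = number of shared tokens (Counter & Counter keeps minimum counts).
    return max(INDEX, key=lambda name: sum((INDEX[name] & q).values()))


def build_prompt(question: str) -> str:
    source = retrieve(question)
    # Context augmentation: the retrieved text is placed ahead of the question.
    return f"Context: {DOCS[source]}\nQuestion: {question}"


print(build_prompt("How much does the pro plan cost per month?"))
```

A production system would swap the keyword score for vector similarity and send the resulting prompt to an LLM, but the retrieve-then-augment flow stays the same.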

Agno

Agno is a full-stack, open-source Python framework designed for building advanced multi-agent AI systems. It stands out with its versatile agent memory, state and reasoning management, multi-modal input/output, robust support for agentic search and vector databases, and seamless integration with popular LLMs and toolkits. Agno is focused on scalable, deterministic, and memory-rich agent workflows—ranging from tool-using bots to collaborative agent teams.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : Agno + community

⭐ GitHub stars : 29k+

⚙️ Features :

Model-agnostic and adaptable to diverse reasoning engines

Advanced agentic memory with support for vector DBs, knowledge, and history

Supports both individual agents and collaborative multi-agent teams

Integration of tools like web scraping, browsers, APIs, and more (including native Bright Data tools)

Multi-modal support for rich inputs/outputs

Pluggable agent search and RAG pipelines

Full state/session management, custom workflows, and advanced control

High performance, observability, and flexibility

🧠 Supported models : OpenAI, Gemini, Anthropic, Llama, Hugging Face, Cohere, Google, and more

CrewAI

CrewAI is a lean, lightning-fast Python framework built entirely from scratch. Unlike several other AI agent frameworks on this list, it is completely independent of LangChain or any other agent tools. It gives developers both high-level simplicity and fine-grained control, making it ideal for building autonomous AI agents customized for any use case.

The two main concepts in CrewAI are:

Crews : Designed for autonomy and collaborative intelligence, allowing you to build AI teams where each agent has defined roles, tools, and goals.

Flows : Provide event-driven, granular control and enable single-LLM-call orchestration. Flows integrate with Crews for precise execution.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : CrewAI + community

⭐ GitHub stars : 30k+

⚙️ Features :

Possibility to build standalone AI agents

Flexibility to orchestrate autonomous agents

Ability to combine autonomy and precision for real-world scenarios

Option to customize every layer of the system, from high-level workflows to low-level internal prompts and agent behaviors

Reliable performance across both simple and complex enterprise-level tasks

Capability to create powerful, adaptable, and production-ready AI automations with ease

🧠 Supported models : OpenAI, Anthropic, Google, Azure OpenAI, AWS, Cohere, VoyageAI, Hugging Face, Ollama, Mistral AI, Replicate, Together AI, AI21, Cloudflare Workers AI, DeepInfra, Groq, SambaNova, NVIDIA, and more

PydanticAI

PydanticAI is a Python framework for building production-grade generative AI applications. Created by the Pydantic team, it is model-agnostic and supports real-time debugging. It also offers features like type safety, structured responses, dependency injection, and graph support. Its main goal is to streamline AI app development through familiar Python tools and best practices.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : Pydantic team + community

⭐ GitHub stars : 8.4k+

⚙️ Features :

Model-agnostic, with built-in support for multiple AI model proxies

Pydantic Logfire integration for real-time debugging, performance monitoring, and behavioral tracking of LLM-powered apps

Type-safety for type checking and static analysis using Pydantic models

Python-centric design for ergonomic GenAI development

Structured responses, using consistent and validated outputs with Pydantic models

Optional dependency injection system to inject data, tools, and validators into agents

Support for continuous streaming and on-the-fly validation

Graph support through Pydantic Graph

Output validation with automatic retries on schema mismatch

Support for asynchronous agent execution and tool invocation

🧠 Supported models : OpenAI, Anthropic, Gemini, Ollama, Groq, Mistral, Cohere, and Bedrock
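"Output validation with automatic retries on schema mismatch" boils down to a loop: parse the model's output against a schema, and if it fails, ask again with the error attached. The sketch below shows that loop using only the standard library. It is not PydanticAI's API: `Ticket`, `parse_ticket`, `stub_model`, and `run_with_retries` are hypothetical, and the stub simulates a model that gets the schema wrong on its first attempt.

```python
import json
from dataclasses import dataclass


@dataclass
class Ticket:
    title: str
    priority: int


def parse_ticket(raw: str) -> Ticket:
    data = json.loads(raw)
    # Hand-written schema check; a real project would use Pydantic models.
    if not isinstance(data.get("title"), str) or not isinstance(data.get("priority"), int):
        raise ValueError("output does not match the Ticket schema")
    return Ticket(data["title"], data["priority"])


def stub_model(prompt: str, attempt: int) -> str:
    # Assumption: simulates a model whose first answer has the wrong type.
    if attempt == 0:
        return '{"title": "Fix login", "priority": "high"}'
    return '{"title": "Fix login", "priority": 1}'


def run_with_retries(prompt: str, max_retries: int = 2) -> Ticket:
    last_error = None
    for attempt in range(max_retries + 1):
        try:
            return parse_ticket(stub_model(prompt, attempt))
        except ValueError as err:  # also covers json.JSONDecodeError
            last_error = err
            # Feed the validation error back so the next attempt can correct it.
            prompt += f"\nPrevious output was invalid: {err}"
    raise RuntimeError("no valid output") from last_error


print(run_with_retries("Create a ticket for the login bug"))
```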

Semantic Kernel

Semantic Kernel is an open-source SDK from Microsoft for building AI agents and multi-agent systems. It integrates with several AI providers, including OpenAI, Azure, Hugging Face, and more. It supports flexible orchestration, plugin integration, and local or cloud deployment in Python, .NET, and Java. As an AI agent framework, it is ideal for enterprise-grade AI apps.

🔗 Repository : GitHub

💻 Programming language : Python, .NET, Java

👨💻 Developed by : Microsoft

⭐ GitHub stars : 24k+

⚙️ Features :

Possibility to connect to any LLM, with built-in support for OpenAI, Azure OpenAI, Hugging Face, NVIDIA, and more

Capability to build modular AI agents with access to tools, plugins, memory, and planning features

Support for orchestrating complex workflows with collaborating specialist agents in multi-agent systems

Ability to extend with native code functions, prompt templates, OpenAPI specs, or MCP

Integration with vector databases like Azure AI Search, Elasticsearch, Chroma, and more

Support for processing text, vision, and audio inputs with multimodal capabilities

Possibility for local deployment using Ollama, LMStudio, or ONNX

Capability to model complex business processes with a structured workflow approach

Built for observability, security, and stable APIs, ensuring enterprise readiness

🧠 Supported models : Amazon AI, Azure AI, Azure OpenAI, Google models, Hugging Face, Mistral AI, Ollama, ONNX, OpenAI, NVIDIA, and others

Letta

Letta (formerly known as MemGPT) is an open-source framework for building stateful LLM applications. It supports the development of agents with advanced reasoning abilities and transparent, long-term memory. Letta is a white-box, model-agnostic framework, giving you full control over how agents function and learn over time. The library is available in both Python and TypeScript.

🔗 Repository : GitHub

💻 Programming language : Python, TypeScript

👨💻 Developed by : Letta + community

⭐ GitHub stars : 15.9k+

⚙️ Features :

Possibility to build and monitor agents using an integrated development environment with a visual UI

Availability of Python SDK, TypeScript SDK, and REST API for flexible integration

Capability to manage agent memory for more context-aware interactions

Support for persistence by storing all agent state in a database

Ability to call and execute both custom and pre-built tools

Option to define tool usage rules by constraining actions in a graph-like structure

Support for streaming outputs for real-time interactions

Native support for multi-agent systems and multi-user collaboration

Compatibility with both closed-source models and open-source providers

Possibility to deploy in production using Docker or Letta Cloud for scalability

🧠 Supported models : OpenAI, Anthropic, DeepSeek, AWS Bedrock, Groq, xAI (Grok), Together, Gemini, Google Vertex, Azure OpenAI, Ollama, LM Studio, vLLM, and others
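The option to "define tool usage rules by constraining actions in a graph-like structure" can be pictured as a small state graph: each tool lists which tools may follow it. The sketch below is a conceptual illustration, not Letta's API. `TOOL_RULES`, `validate_plan`, and the tool names are hypothetical.

```python
# Hypothetical rule graph: key = last tool called, value = tools allowed next.
TOOL_RULES = {
    "start": {"search"},
    "search": {"summarize", "search"},
    "summarize": {"send_message"},
    "send_message": set(),  # terminal tool: nothing may follow it
}


def validate_plan(plan: list[str]) -> bool:
    """Return True if the tool sequence follows the graph and ends on a terminal tool."""
    previous = "start"
    for tool in plan:
        if tool not in TOOL_RULES[previous]:
            return False
        previous = tool
    return TOOL_RULES[previous] == set()


print(validate_plan(["search", "search", "summarize", "send_message"]))
print(validate_plan(["summarize", "send_message"]))
```

Rules like these make agent behavior more predictable: the agent may search repeatedly, but it cannot send a message before summarizing.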

Rasa

Rasa is an open-source machine learning framework for automating text- and voice-based conversations. It gives you what you need to build contextual chatbots and voice assistants that integrate with platforms like Slack, Facebook Messenger, Telegram, Alexa, and Google Home. It supports scalable, context-driven interactions for more meaningful conversations with AI agents.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : Rasa + community

⭐ GitHub stars : 20k+

⚙️ Features :

Understands user input, identifies intents, and extracts relevant information through NLU capabilities

Manages conversation flow and handles complex scenarios with accurate responses

No-code, drag-and-drop UI for building, testing, and refining conversational AI apps

Connects with messaging channels, third-party systems, and tools for flexible experiences

Available as a free open-source framework and a feature-rich Pro version

Supports enterprise features for security, analytics, and team collaboration

🧠 Supported models : OpenAI, Cohere, Vertex AI, Hugging Face, Llama
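The NLU step (identifying an intent and extracting entities from a user message) can be illustrated with a toy rule-based parser. Rasa itself relies on trained models, so this keyword-matching sketch is a deliberate simplification, and `INTENT_KEYWORDS` and `parse` are hypothetical names.

```python
import re

# Hypothetical intents, each described by a few trigger words.
INTENT_KEYWORDS = {
    "book_flight": {"flight", "fly", "book"},
    "check_weather": {"weather", "forecast", "rain"},
}


def parse(message: str) -> dict:
    words = set(re.findall(r"[a-z]+", message.lower()))
    # Score each intent by how many of its trigger words appear.
    scores = {intent: len(words & kw) for intent, kw in INTENT_KEYWORDS.items()}
    intent = max(scores, key=scores.get)
    if scores[intent] == 0:
        intent = "fallback"  # nothing matched
    # Naive entity extraction: a capitalized word right after "to".
    match = re.search(r"\bto ([A-Z][a-z]+)", message)
    entities = {"destination": match.group(1)} if match else {}
    return {"intent": intent, "entities": entities}


print(parse("Book a flight to Berlin"))
```

The structured result is what a dialogue manager would consume to decide the next step in the conversation.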

Flowise

Flowise is an open-source, low-code tool for building custom LLM orchestration flows and AI agents. It comes with an intuitive drag-and-drop UI for rapid development and iteration of complex workflows. Flowise automates repetitive tasks, integrates data sources, and makes it easier to create sophisticated AI-powered systems. Its goal is to accelerate the transition from testing to production.

🔗 Repository : GitHub

💻 Programming language : TypeScript, Python

👨💻 Developed by : Flowise + community

⭐ GitHub stars : 37.2k+

⚙️ Features :

No-code interface with drag-and-drop UI, accessible to non-technical users

Leverages the LangChain framework for flexible AI component integration

Pre-built components including language models, data sources, and processing modules

Dynamic input variables for adaptable AI applications

Easy fine-tuning of language models with custom data

Grouping components into reusable, higher-level modules

Native integration with cloud services, databases, and other AI frameworks

Deployment options on cloud platforms or integration into existing applications

Scalable and reliable for both prototypes and large-scale deployments

Rapid prototyping for quick iteration on AI projects

Pre-configured VM setup for AWS, Azure, and Google Cloud

🧠 Supported models : AWS Bedrock, Azure OpenAI, NIBittensorLLM, Cohere, Google PaLM, Google Vertex AI, Hugging Face Inference, Ollama, OpenAI, Replicate, NVIDIA, Anthropic, Mistral, IBM Watsonx, Together, Groq

ChatDev

ChatDev is an open-source framework that uses multi-agent collaboration to automate software development. It simulates a virtual software company using specialized AI agents powered by LLMs. These work together across various stages of the software development lifecycle—design, coding, testing, and documentation. By applying AI to the “waterfall” model, it enhances development with collaborative agents dedicated to specific tasks.

🔗 Repository : GitHub

💻 Programming language : Python

👨💻 Developed by : OpenBMB community

⭐ GitHub stars : 26.7k+

⚙️ Features :

Follows the traditional waterfall model across design, development, testing, and documentation stages

Leverages inception prompting to define agent behavior and maintain role fidelity

Assigns agents to roles like CEO, CTO, engineer, designer, tester, and reviewer

Breaks down tasks into subtasks with defined entry and exit conditions

Uses a dual-agent design to simplify collaboration and decision-making

Supports both natural language and code-based communication between agents

Automates code writing, reviewing, testing, and documentation generation

Models teamwork using principles like constraints, commitments, and dynamic environments

Coordinates agents using a mixture-of-experts approach for efficient problem solving

Provides role-based prompts and communication protocols to enforce constraints

Allows agents to reverse roles temporarily to ask clarifying questions

🧠 Supported models : GPT-3.5-turbo, GPT-4, GPT-4-32k
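The combination of waterfall phases and a dual-agent design can be sketched as a loop in which each phase is handled by one instructor and one assistant, and the output of a phase feeds the next. This is an illustration only, not ChatDev's code. `PHASES`, `dual_agent_chat`, and `run_waterfall` are hypothetical, the role pairings are chosen for the example, and no LLM is called.

```python
# Hypothetical phase plan: (phase, instructor role, assistant role).
PHASES = [
    ("design", "CEO", "CTO"),
    ("coding", "CTO", "programmer"),
    ("testing", "programmer", "tester"),
    ("documentation", "CEO", "programmer"),
]


def dual_agent_chat(phase, instructor, assistant, artifact):
    # Assumption: each "agent" is a stub; a real system would call an LLM per turn.
    request = f"{instructor} asks {assistant} to handle {phase}"
    result = f"{artifact} -> {phase} done by {assistant}"
    return request, result


def run_waterfall(task: str):
    artifact, log = task, []
    for phase, instructor, assistant in PHASES:
        # The artifact produced by one phase is the input of the next.
        request, artifact = dual_agent_chat(phase, instructor, assistant, artifact)
        log.append(request)
    return artifact, log


final_artifact, chat_log = run_waterfall("todo app")
print(final_artifact)
```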

Top Frameworks for Developing AI Agents: Summary Table

Here is a summary table to quickly compare the best frameworks for building AI agents:

| AI Agent Framework | Category | Programming Languages | GitHub Stars | Developed By | Premium Features Available | Supported AI Providers |
|---|---|---|---|---|---|---|
| AutoGen | Multi-agent AI system | Python, .NET | 43.1k+ | Microsoft | ❌ | OpenAI, Azure OpenAI, Azure AI Foundry, Anthropic, Ollama, etc. |
| LangChain | Modular Python AI framework | Python | 106k+ | Community | ✔️ | OpenAI, Google, Hugging Face, Azure, AWS, etc. |
| OpenAI Agents SDK | OpenAI SDK for multi-agent workflows | Python | 8.6k+ | OpenAI | ❌ | OpenAI |
| Langflow | Low-code, visual AI workflow builder | Python | 54.9k+ | Community | ❌ | Amazon Bedrock, Anthropic, Azure OpenAI, Cohere, Google, etc. |
| LlamaIndex | Framework for indexing and managing data for AI agents | Python, TypeScript | 40.9k+ | LlamaIndex + Community | ✔️ | OpenAI, Hugging Face, Azure OpenAI, Cohere, Google, etc. |
| Agno | Full-stack, multi-agent AI framework | Python | 29k+ | Agno + Community | ❌ | OpenAI, Gemini, Anthropic, Llama, Hugging Face, Cohere, Google, etc. |
| CrewAI | Autonomous AI agent framework | Python | 30k+ | CrewAI + Community | ✔️ | OpenAI, Anthropic, Google, Azure OpenAI, AWS, etc. |
| PydanticAI | Framework for generative AI apps | Python | 8.4k+ | Pydantic team + Community | ✔️ | OpenAI, Anthropic, Gemini, Ollama, Groq, etc. |
| Semantic Kernel | Enterprise-ready SDK for AI agent systems | Python, .NET, Java | 24k+ | Microsoft | ❌ | Amazon AI, Azure AI, Azure OpenAI, Google models, Hugging Face, etc. |
| Letta | Stateful LLM agent framework | Python, TypeScript | 15.9k+ | Letta + Community | ✔️ | OpenAI, Anthropic, DeepSeek, AWS Bedrock, Groq, etc. |
| Rasa | Framework for building AI chatbots and agents | Python | 20k+ | Rasa + Community | ✔️ | OpenAI, Cohere, Hugging Face, Llama, etc. |
| Flowise | Low-code AI agent framework | TypeScript, Python | 37.2k+ | Flowise + Community | ✔️ | OpenAI, AWS Bedrock, Azure OpenAI, Groq, etc. |
| ChatDev | Framework for multi-agent collaboration for development | Python | 26.7k+ | OpenBMB Community | ✔️ | GPT-3.5-turbo, GPT-4, GPT-4-32k |

Other honorable mentions that did not make the list of AI agent frameworks are:

Botpress : A platform for building AI agents with LLMs, offering enterprise-grade scalability, security, and integrations.

LangGraph : A reasoning-based framework enabling graph workflows and multi-agent collaboration; it is part of LangChain’s ecosystem.

Lyzr : A full-stack framework for autonomous AI agents, focusing on enterprise solutions and workflow automation.

Crawl4AI : An open-source tool for AI-powered web crawling and data extraction. See how to use Crawl4AI with DeepSeek to build an AI scraping agent.

Stagehand : A lightweight framework for task-based AI agents that simplifies process automation and supports modular agent design.

Browser Use : A browser automation tool that integrates with AI agents to simulate human-like interactions for tasks like web scraping or testing.

Conclusion

In this article, you learned what an AI agent framework is and understood the key factors to consider when choosing one. Using these criteria, we listed the best tools available today for building AI agents.

No matter which AI agent library you choose, developing an agent without access to data is nearly impossible. Fortunately, Bright Data—the world’s leading data provider—has you covered!

Equip your AI agent with access to the Web through cutting-edge services such as:

Autonomous AI agents : Search, access, and interact with any website in real-time using a powerful set of APIs.

Vertical AI apps : Build reliable, custom data pipelines to extract web data from industry-specific sources.

Foundation models : Access compliant, web-scale datasets to power pre-training, evaluation, and fine-tuning.

Multimodal AI : Tap into the world’s largest repository of images, videos, and audio—optimized for AI.

Data providers : Connect with trusted providers to source high-quality, AI-ready datasets at scale.

Data packages : Get curated, ready-to-use datasets—structured, enriched, and annotated.

For more information, take a look at our AI products.

Create a Bright Data account and try all our products and services for AI agent development!